C05 | Human-Machine Interaction with Adaptive Multisensory Systems

User-adaptive systems are a recent trend in technological development. Designed to learn the characteristics of the user interacting with them, they change their own features to provide users with a targeted, personalized experience.

This project investigates human adaptive behavior in mutual-learning situations. A better understanding of adaptive human-machine interactions, and of human sensorimotor learning processes in particular, will provide guidelines, evaluation criteria, and recommendations that will be beneficial for all projects within SFB/Transregio 161 that focus on the design of user-adaptive systems and algorithms.

To achieve this goal, we will carry out behavioral experiments using human participants and base our empirical choices on the framework of optimal decision theory as derived from the Bayesian approach. This approach can be used as a tool to construct ideal observer models against which human performance can be compared.
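As a rough illustration of what an ideal observer model can look like, the sketch below combines a noisy sensory measurement with prior knowledge in the Bayesian way. It assumes a Gaussian prior and Gaussian sensory noise, which is a common textbook simplification and not necessarily the model used in this project; the function name and all parameter names are hypothetical.

```python
import math


def ideal_observer_estimate(measurement, sigma_sensory, prior_mean, sigma_prior):
    """Posterior mean and standard deviation for a Gaussian prior and likelihood.

    Assumption (not from the project description): both the prior over the
    stimulus and the sensory noise are Gaussian, so the posterior is Gaussian too.
    """
    var_sensory = sigma_sensory ** 2
    var_prior = sigma_prior ** 2
    # Weight on the measurement grows as the measurement becomes more reliable
    # relative to the prior.
    weight = var_prior / (var_prior + var_sensory)
    posterior_mean = weight * measurement + (1.0 - weight) * prior_mean
    posterior_sd = math.sqrt(var_prior * var_sensory / (var_prior + var_sensory))
    return posterior_mean, posterior_sd


# Equal reliability of prior and measurement: the estimate lies halfway between.
estimate, uncertainty = ideal_observer_estimate(
    measurement=10.0, sigma_sensory=1.0, prior_mean=0.0, sigma_prior=1.0
)
```

A participant's responses can then be compared against `estimate` to see how closely human performance approaches this benchmark under the stated assumptions.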

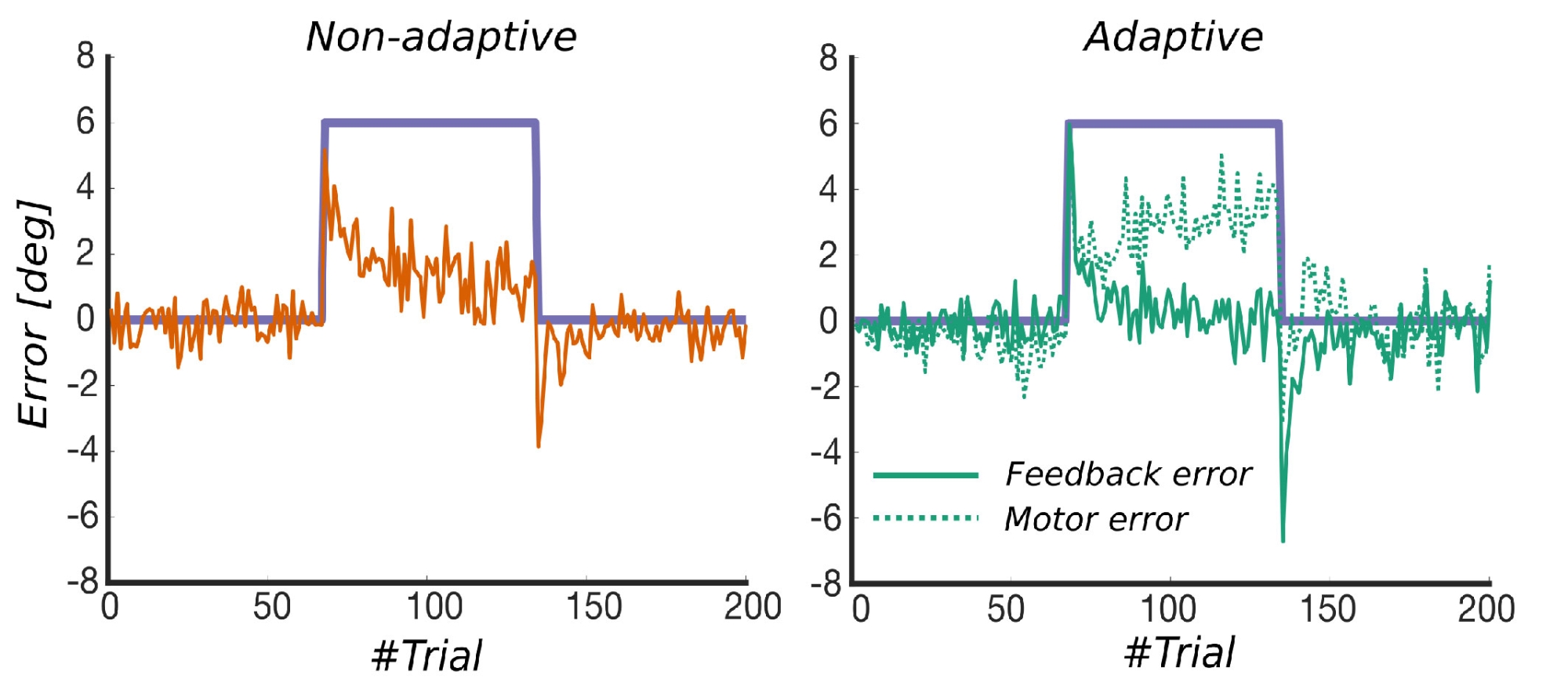

Previous studies have shown that adaptive features can create undesirable side effects. In applications that require users to improve their skills, for example, these side effects could impede the human learning process (Fig. 1).

Research Questions

What are the determinants of mutual adaptation between an adaptable user and a user-adaptive system?

How does mutual adaptation change based on the sensory modalities involved?

Can mutual adaptation enhance immersion in an interactive virtual environment?

Do the interaction capabilities and experiences learnt in an adaptive system generalize to real-life, non-adaptive scenarios?

Fig. 1: Median of errors. In the non-adaptive condition (left panel), the error-based algorithm is not implemented, so the displayed feedback error equals the pointing error. In the adaptive condition (right panel), the system acquires the subject's pointing error (dashed line) and partially corrects for it in the displayed feedback error (solid line). Notably, the pointing error increases over time in the adaptive condition.
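The relation between the two error measures in the caption can be sketched as follows. This is a minimal illustration, assuming the system removes a fixed fraction of the pointing error before displaying feedback; the proportional rule, the `correction_gain` parameter, and the sample values are hypothetical and are not taken from the project's algorithm or data.

```python
from statistics import median


def displayed_feedback_error(pointing_error, correction_gain=0.0):
    """Feedback error shown to the user after a partial system correction.

    Assumption: the system removes a fixed fraction (correction_gain) of the
    true pointing error. A gain of 0.0 corresponds to the non-adaptive condition.
    """
    return (1.0 - correction_gain) * pointing_error


# Made-up pointing errors in arbitrary units, for illustration only.
pointing_errors = [4.0, -2.0, 1.0, 3.0, -1.0]

non_adaptive = [displayed_feedback_error(e) for e in pointing_errors]
adaptive = [displayed_feedback_error(e, correction_gain=0.5) for e in pointing_errors]

median_pointing = median([abs(e) for e in pointing_errors])
median_displayed_adaptive = median([abs(e) for e in adaptive])
```

In the adaptive case the displayed error understates the true pointing error, which is why the two curves separate in the right panel while they coincide in the left one.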

Publications

- M. Kurzweg, Y. Weiss, M. O. Ernst, A. Schmidt, and K. Wolf, “Survey on Haptic Feedback through Sensory Illusions in Interactive Systems,” ACM Comput. Surv., vol. 56, Art. no. 8, Apr. 2024, doi: 10.1145/3648353.

- W. Teramoto and M. O. Ernst, “Effects of invisible lip movements on phonetic perception,” Scientific Reports, vol. 13, Art. no. 1, 2023, doi: 10.1038/s41598-023-33791-y.

- F. Chiossi et al., “Adapting visualizations and interfaces to the user,” it - Information Technology, vol. 64, pp. 133–143, 2022, doi: 10.1515/itit-2022-0035.

- J. Zagermann et al., “Complementary Interfaces for Visual Computing,” it - Information Technology, vol. 64, pp. 145–154, 2022, doi: 10.1515/itit-2022-0031.

- P. Balestrucci, D. Wiebusch, and M. O. Ernst, “ReActLab: A Custom Framework for Sensorimotor Experiments ‘in-the-wild’,” Frontiers in Psychology, vol. 13, Jun. 2022, doi: 10.3389/fpsyg.2022.906643.

- P. Balestrucci, V. Maffei, F. Lacquaniti, and A. Moscatelli, “The Effects of Visual Parabolic Motion on the Subjective Vertical and on Interception,” Neuroscience, vol. 453, pp. 124–137, Jan. 2021, [Online]. Available: https://www.sciencedirect.com/science/article/abs/pii/S0306452220306424

- P. Balestrucci et al., “Pipelines Bent, Pipelines Broken: Interdisciplinary Self-Reflection on the Impact of COVID-19 on Current and Future Research (Position Paper),” in 2020 IEEE Workshop on Evaluation and Beyond-Methodological Approaches to Visualization (BELIV), IEEE, 2020, pp. 11–18. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9307759

- P. Balestrucci and M. O. Ernst, “Visuo-motor adaptation during interaction with a user-adaptive system,” Journal of Vision, vol. 19, p. 187a, Sep. 2019, [Online]. Available: https://jov.arvojournals.org/article.aspx?articleid=2750667

- T.-K. Machulla, L. L. Chuang, F. Kiss, M. O. Ernst, and A. Schmidt, “Sensory Amplification Through Crossmodal Stimulation,” in Proceedings of the CHI Workshop on Amplification and Augmentation of Human Perception, 2017.

- T. Waltemate et al., “The Impact of Latency on Perceptual Judgments and Motor Performance in Closed-loop Interaction in Virtual Reality,” in Proceedings of the ACM Conference on Virtual Reality Software and Technology (VRST), D. Kranzlmüller and G. Klinker, Eds., ACM, 2016, pp. 27–35. doi: 10.1145/2993369.2993381.

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.