A01 | Uncertainty Quantification and Analysis in Visualization

David Hägele, University of Stuttgart – Email | Website

Dr. Rebecca Kehlbeck, University of Konstanz – Email | Website

Patrick Paetzold, University of Konstanz – Email | Website

Michael Stroh, University of Konstanz – Email | Website

Sita Vriend, University of Stuttgart – Email | Website

Yumeng Xue, University of Konstanz – Email | Website

Our long-term goal is the modeling, handling, and quantification of uncertainty throughout the complete visual computing process, from visual-computing-specific models of uncertainty to the visual representation of, and interaction with, uncertainty information. We aim to investigate models and methods for quantifying individual sources of uncertainty, the propagation of uncertainty through the visual computing pipeline, and the impact of uncertainty on the components of the visual computing process. Furthermore, we will advance the visualization and visual analytics of uncertainty and investigate several application examples.

Research Questions

How can we integrate and combine the different aspects of uncertainty in the various subareas of visual computing?

How can uncertainty be modeled and propagated through the different steps of the visualization process?

What are effective ways to communicate uncertainty to humans, and how can they meaningfully interact with uncertainty displays?

Which methods are best suited to evaluate uncertainty visualization, and how can we quantify human understanding of uncertainty?

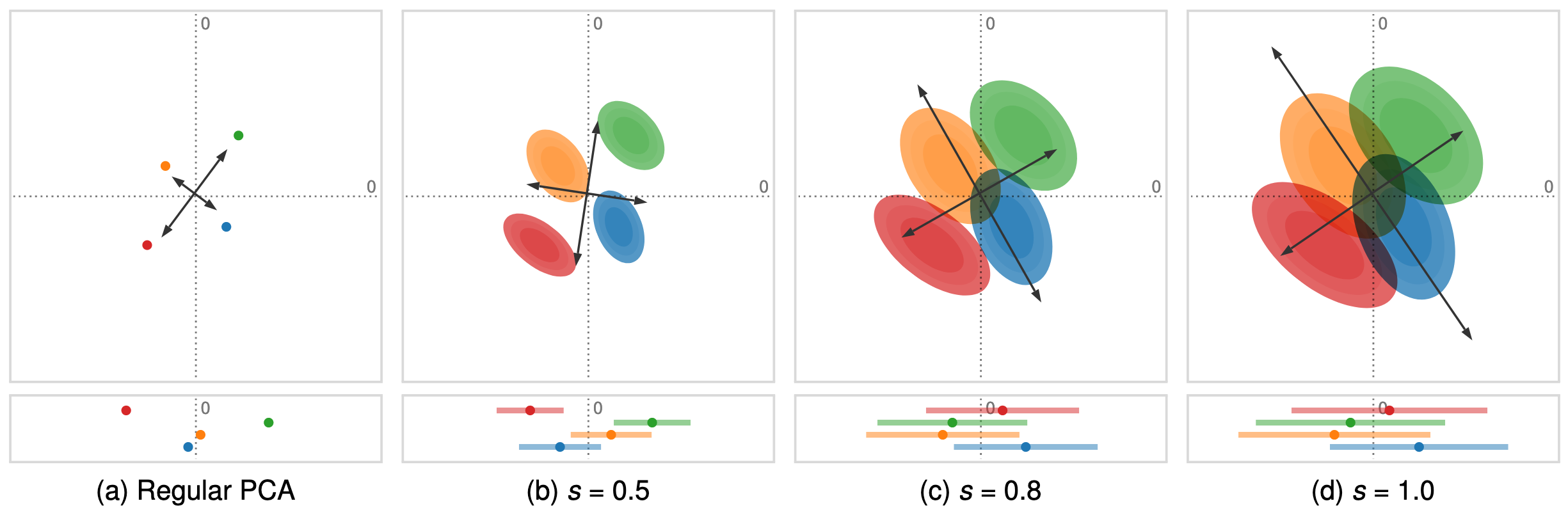

Fig. 1: Uncertainty-Aware Principal Component Analysis. The data consist of multivariate probability distributions; the plots show the impact of different levels of variance.

Publications

- R. Bauer, M. Evers, Q. Q. Ngo, G. Reina, S. Frey, and M. Sedlmair, “Voronoi Cell Interface‐Based Parameter Sensitivity Analysis for Labeled Samples,” Computer Graphics Forum, May 2025, doi: 10.1111/cgf.70122.

- S. A. Vriend, S. Vidyapu, K.-T. Chen, and D. Weiskopf, “Which Experimental Design is Better Suited for VQA Tasks? Eye Tracking Study on Cognitive Load, Performance, and Gaze Allocations,” in Proceedings of the Symposium on Eye Tracking and Visualization (ETVIS), Jun. 2024. [Online]. Available: https://arxiv.org/abs/2404.04036

- Y. Xue et al., “Reducing Ambiguities in Line-Based Density Plots by Image-Space Colorization,” IEEE Transactions on Visualization and Computer Graphics, vol. 30, Art. no. 1, Jan. 2024, [Online]. Available: https://www.computer.org/csdl/journal/tg/2024/01/10297597/1RyY1MBMcIo

- T. Krake, D. Klötzl, D. Hägele, and D. Weiskopf, “Uncertainty-Aware Seasonal-Trend Decomposition Based on Loess,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–16, 2024, doi: 10.1109/tvcg.2024.3364388.

- P. Paetzold, D. Hägele, M. Evers, D. Weiskopf, and O. Deussen, “UADAPy: An Uncertainty-Aware Visualization and Analysis Toolbox.” IEEE, pp. 48–50, 2024. doi: 10.1109/uncertaintyvisualization63963.2024.00011.

- F. L. Dennig et al., “The Categorical Data Map: A Multidimensional Scaling-Based Approach,” in 2024 IEEE Visualization in Data Science (VDS), IEEE, 2024, pp. 25–34. doi: 10.1109/vds63897.2024.00008.

- N. Rodrigues, C. Schulz, S. Döring, D. Baumgartner, T. Krake, and D. Weiskopf, “Relaxed Dot Plots: Faithful Visualization of Samples and Their Distribution,” IEEE Transactions on Visualization and Computer Graphics, vol. 29, Art. no. 1, Jan. 2023, doi: 10.1109/TVCG.2022.3209429.

- P. Paetzold, R. Kehlbeck, H. Strobelt, Y. Xue, S. Storandt, and O. Deussen, “RectEuler: Visualizing Intersecting Sets using Rectangles,” Computer Graphics Forum, vol. 42, Art. no. 3, 2023, doi: 10.1111/cgf.14814.

- M. Xue et al., “Taurus: Towards a Unified Force Representation and Universal Solver for Graph Layout,” IEEE Transactions on Visualization and Computer Graphics, vol. 29, Art. no. 1, 2023, doi: 10.1109/TVCG.2022.3209371.

- J. Görtler et al., “Neo: Generalizing Confusion Matrix Visualization to Hierarchical and Multi-Output Labels,” in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, in CHI ’22. New York, NY, USA: Association for Computing Machinery, 2022, pp. 1–13. doi: 10.1145/3491102.3501823.

- R. Kehlbeck, J. Görtler, Y. Wang, and O. Deussen, “SPEULER: Semantics-preserving Euler Diagrams,” IEEE Transactions on Visualization and Computer Graphics, vol. 28, Art. no. 1, 2022, [Online]. Available: https://www.computer.org/csdl/journal/tg/2022/01/09552459/1xibZ9AqsLu

- D. Hägele, T. Krake, and D. Weiskopf, “Uncertainty-Aware Multidimensional Scaling,” IEEE Transactions on Visualization and Computer Graphics, vol. 29, Art. no. 1, 2022, doi: 10.1109/TVCG.2022.3209420.

- F. Schreiber and D. Weiskopf, “Quantitative Visual Computing,” it - Information Technology, vol. 64, pp. 119–120, 2022, doi: 10.1515/itit-2022-0048.

- D. Hägele et al., “Uncertainty Visualization: Fundamentals and Recent Developments,” it - Information Technology, vol. 64, pp. 121–132, 2022, doi: 10.1515/itit-2022-0033.

- Y. Wang, M. Koch, M. Bâce, D. Weiskopf, and A. Bulling, “Impact of Gaze Uncertainty on AOIs in Information Visualisations,” in 2022 Symposium on Eye Tracking Research and Applications, ACM, Jun. 2022, pp. 1–6. doi: 10.1145/3517031.3531166.

- S. Dosdall, K. Angerbauer, L. Merino, M. Sedlmair, and D. Weiskopf, “Toward In-Situ Authoring of Situated Visualization with Chorded Keyboards,” in 15th International Symposium on Visual Information Communication and Interaction, VINCI 2022, Chur, Switzerland, August 16-18, 2022, M. Burch, G. Wallner, and D. Limberger, Eds., ACM, Aug. 2022, pp. 1–5. doi: 10.1145/3554944.3554970.

- Y. Zhang, K. Klein, O. Deussen, T. Gutschlag, and S. Storandt, “Robust Visualization of Trajectory Data,” it - Information Technology, vol. 64, pp. 181–191, 2022, doi: 10.1515/itit-2022-0036.

- D. Weiskopf, “Uncertainty Visualization: Concepts, Methods, and Applications in Biological Data Visualization,” Frontiers in Bioinformatics, vol. 2, 2022, doi: 10.3389/fbinf.2022.793819.

- T. Müller, C. Schulz, and D. Weiskopf, “Adaptive Polygon Rendering for Interactive Visualization in the Schwarzschild Spacetime,” European Journal of Physics, vol. 43, Art. no. 1, 2021, doi: 10.1088/1361-6404/ac2b36.

- K. Schatz et al., “2019 IEEE Scientific Visualization Contest Winner: Visual Analysis of Structure Formation in Cosmic Evolution,” IEEE Computer Graphics and Applications, vol. 41, Art. no. 6, 2021, doi: 10.1109/MCG.2020.3004613.

- K. Gadhave et al., “Predicting intent behind selections in scatterplot visualizations,” Information Visualization, vol. 20, Art. no. 4, 2021, doi: 10.1177/14738716211038604.

- C. Schulz et al., “Multi-Class Inverted Stippling,” ACM Trans. Graph., vol. 40, Art. no. 6, Dec. 2021, doi: 10.1145/3478513.3480534.

- P. Balestrucci et al., “Pipelines Bent, Pipelines Broken: Interdisciplinary Self-Reflection on the Impact of COVID-19 on Current and Future Research (Position Paper),” in 2020 IEEE Workshop on Evaluation and Beyond-Methodological Approaches to Visualization (BELIV), IEEE, 2020, pp. 11–18. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9307759

- N. Brich et al., “Visual Analysis of Multivariate Intensive Care Surveillance Data,” in Eurographics Workshop on Visual Computing for Biology and Medicine, B. Kozlíková, M. Krone, N. Smit, K. Nieselt, and R. G. Raidou, Eds., The Eurographics Association, 2020.

- M. Sondag, W. Meulemans, C. Schulz, K. Verbeek, D. Weiskopf, and B. Speckmann, “Uncertainty Treemaps,” in Proceedings of the IEEE Pacific Visualization Symposium (PacificVis), 2020, pp. 111–120. [Online]. Available: https://ieeexplore.ieee.org/document/9086235

- N. Rodrigues, C. Schulz, A. Lhuillier, and D. Weiskopf, “Cluster-Flow Parallel Coordinates: Tracing Clusters Across Subspaces,” in Proceedings of the Graphics Interface Conference (GI) (forthcoming), Canadian Human-Computer Communications Society / Société canadienne du dialogue humain-machine, 2020, pp. 0:1–0:11. doi: 10.20380/GI2020.38.

- J. Görtler, M. Spicker, C. Schulz, D. Weiskopf, and O. Deussen, “Stippling of 2D Scalar Fields,” IEEE Transactions on Visualization and Computer Graphics, vol. 25, Art. no. 6, 2019, [Online]. Available: https://ieeexplore.ieee.org/document/8667696

- Y. Wang, Z. Wang, C.-W. Fu, H. Schmauder, O. Deussen, and D. Weiskopf, “Image-Based Aspect Ratio Selection,” IEEE Transactions on Visualization and Computer Graphics, vol. 25, Art. no. 1, 2019, [Online]. Available: https://ieeexplore.ieee.org/document/8440843

- V. Bruder, C. Schulz, R. Bauer, S. Frey, D. Weiskopf, and T. Ertl, “Voronoi-Based Foveated Volume Rendering,” in Proceedings of the Eurographics Conference on Visualization - Short Papers (EuroVis), J. Johansson, F. Sadlo, and G. E. Marai, Eds., Eurographics Association, 2019, pp. 67–71. doi: 10.2312/evs.20191172.

- K. Schatz et al., “Visual Analysis of Structure Formation in Cosmic Evolution,” in Proceedings of the IEEE Scientific Visualization Conference (SciVis), 2019, pp. 33–41. doi: 10.1109/scivis47405.2019.8968855.

- C. Schulz et al., “A Framework for Pervasive Visual Deficiency Simulation,” in Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (VR), 2019, pp. 1852–1857. [Online]. Available: https://ieeexplore.ieee.org/document/9044164

- J. Görtler, R. Kehlbeck, and O. Deussen, “A Visual Exploration of Gaussian Processes,” in Proceedings of the Workshop on Visualization for AI Explainability (VISxAI), 2018. [Online]. Available: https://distill.pub/2019/visual-exploration-gaussian-processes/

- C. Schulz, K. Schatz, M. Krone, M. Braun, T. Ertl, and D. Weiskopf, “Uncertainty Visualization for Secondary Structures of Proteins,” in Proceedings of the IEEE Pacific Visualization Symposium (PacificVis), IEEE, 2018, pp. 96–105. [Online]. Available: https://ieeexplore.ieee.org/document/8365980

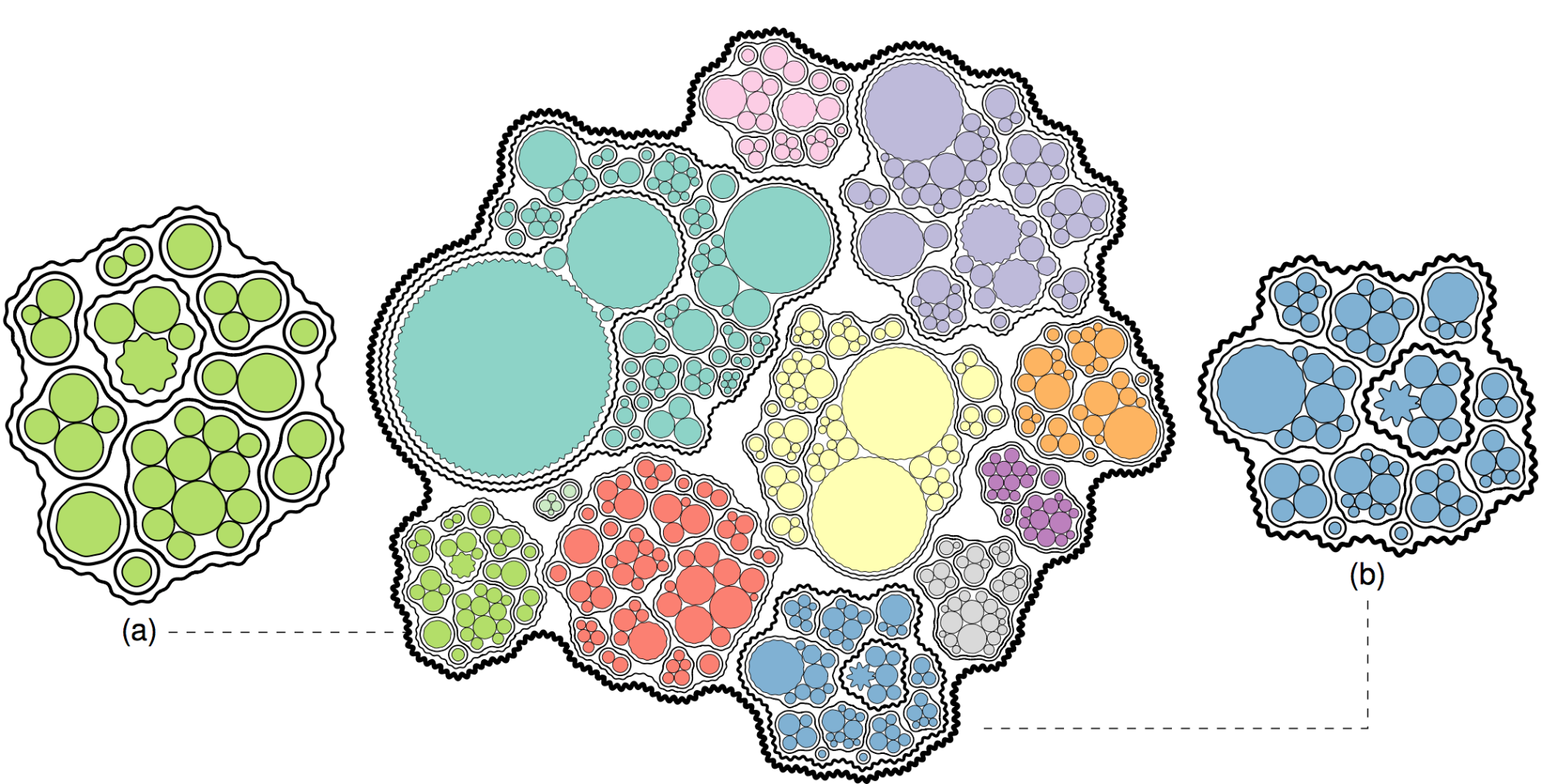

- J. Görtler, C. Schulz, O. Deussen, and D. Weiskopf, “Bubble Treemaps for Uncertainty Visualization,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, Art. no. 1, 2018, doi: 10.1109/TVCG.2017.2743959.

- T. Spinner, J. Körner, J. Görtler, and O. Deussen, “Towards an Interpretable Latent Space: An Intuitive Comparison of Autoencoders with Variational Autoencoders,” in Proceedings of the Workshop on Visualization for AI Explainability (VISxAI), IEEE VIS, 2018. [Online]. Available: https://thilospinner.com/towards-an-interpretable-latent-space/

- C. Schulz, A. Zeyfang, M. van Garderen, H. Ben Lahmar, M. Herschel, and D. Weiskopf, “Simultaneous Visual Analysis of Multiple Software Hierarchies,” in Proceedings of the IEEE Working Conference on Software Visualization (VISSOFT), IEEE, 2018, pp. 87–95. [Online]. Available: https://ieeexplore.ieee.org/document/8530134/

- P. Gralka, C. Schulz, G. Reina, D. Weiskopf, and T. Ertl, “Visual Exploration of Memory Traces and Call Stacks,” in Proceedings of the IEEE Working Conference on Software Visualization (VISSOFT), IEEE, 2017, pp. 54–63. doi: 10.1109/VISSOFT.2017.15.

- K. Srulijes et al., “Visualization of Eye-Head Coordination While Walking in Healthy Subjects and Patients with Neurodegenerative Diseases,” poster (reviewed) presented at the Symposium of the International Society of Posture and Gait Research (ISPGR), 2017.

- C. Schulz, M. Burch, F. Beck, and D. Weiskopf, “Visual Data Cleansing of Low-Level Eye Tracking Data,” in Eye Tracking and Visualization: Foundations, Techniques, and Applications. ETVIS 2015, M. Burch, L. L. Chuang, B. Fisher, A. Schmidt, and D. Weiskopf, Eds., Springer International Publishing, 2017, pp. 199–216. doi: 10.1007/978-3-319-47024-5_12.

- C. Schulz, N. Rodrigues, K. Damarla, A. Henicke, and D. Weiskopf, “Visual Exploration of Mainframe Workloads,” in Proceedings of the SIGGRAPH Asia Symposium on Visualization, ACM, 2017, pp. 4:1–4:7. doi: 10.1145/3139295.3139312.

- C. Schulz, A. Nocaj, J. Görtler, O. Deussen, U. Brandes, and D. Weiskopf, “Probabilistic Graph Layout for Uncertain Network Visualization,” IEEE Transactions on Visualization and Computer Graphics, vol. 23, Art. no. 1, 2017, doi: 10.1109/TVCG.2016.2598919.

- K. Kurzhals, B. Fisher, M. Burch, and D. Weiskopf, “Eye Tracking Evaluation of Visual Analytics,” Information Visualization, vol. 15, Art. no. 4, 2016, doi: 10.1177/1473871615609787.

- D. Weiskopf, M. Burch, L. L. Chuang, B. Fisher, and A. Schmidt, Eye Tracking and Visualization: Foundations, Techniques, and Applications. Berlin, Heidelberg: Springer, 2016. [Online]. Available: https://www.springer.com/de/book/9783319470238

- C. Schulz et al., “Generative Data Models for Validation and Evaluation of Visualization Techniques,” in Proceedings of the Workshop on Beyond Time and Errors: Novel Evaluation Methods for Visualization (BELIV), ACM, 2016, pp. 112–124. doi: 10.1145/2993901.2993907.

- K. Kurzhals, M. Hlawatsch, M. Burch, and D. Weiskopf, “Fixation-Image Charts,” in Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA), ACM, 2016, pp. 11–18. doi: 10.1145/2857491.2857507.

- T. Blascheck, F. Beck, S. Baltes, T. Ertl, and D. Weiskopf, “Visual analysis and coding of data-rich user behavior,” in IEEE Conference on Visual Analytics Science and Technology, IEEE, 2016, pp. 141–150. doi: 10.1109/vast.2016.7883520.

- C. Schulz, M. Burch, and D. Weiskopf, “Visual Data Cleansing of Eye Tracking Data,” in Proceedings of the Symposium on Eye Tracking and Visualization (ETVIS), 2015. [Online]. Available: http://etvis.visus.uni-stuttgart.de/etvis2015/papers/etvis15_schulz.pdf

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.