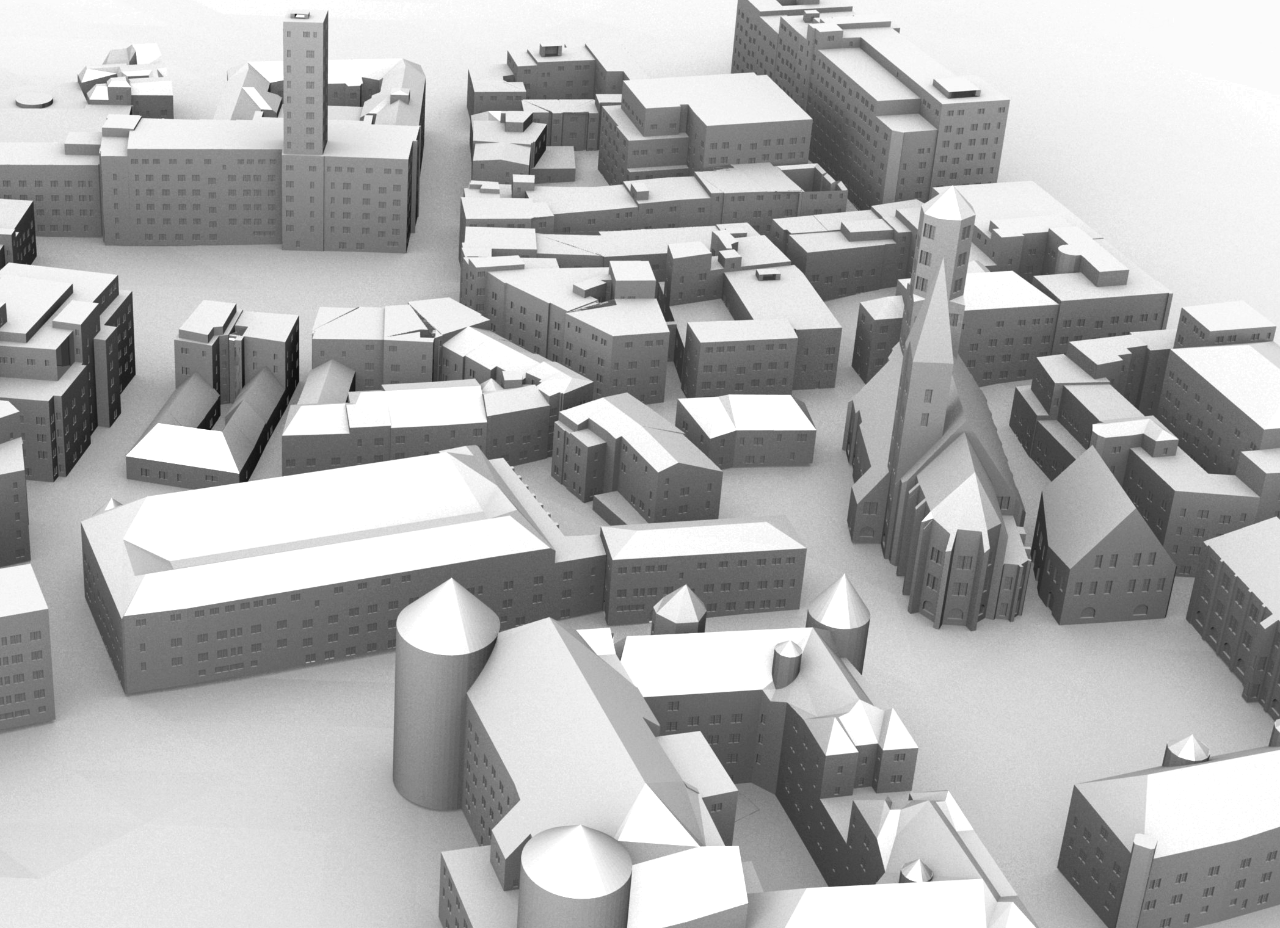

D01 | Perception-Guided Adaptive Modeling of 3D Virtual Cities Based on Probabilistic Grammars

The goal of the project is to create quantitative methods for the perception-aware representation of 3D virtual cities. We will develop a grammar-based system that communicates building-related information effectively through geometric 3D building representations designed to induce a defined degree of perceptual insight in the observer. The system will allow us to quantify this perceptual insight and to predict how changes to the system parameters affect it. Moreover, it will make it possible to enhance the perceptual insight through targeted changes to the geometric representation.

Research Questions

Given a context, which geometric 3D representation is best suited to maximize the observer's understanding of the information to be conveyed?

Which aspects or properties of geometric 3D building representations are relevant for the perception of building-related semantic information?

How can we formalize and use this knowledge to develop a perception-aware modeling concept?

How can we quantify the quality of 3D building representations in a way that reflects the viewer’s perception?

Publications

- D. Laupheimer, P. Tutzauer, N. Haala, and M. Spicker, “Neural Networks for the Classification of Building Use from Street-view Imagery,” ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, vol. IV-2, pp. 177–184, 2018, [Online]. Available: https://www.isprs-ann-photogramm-remote-sens-spatial-inf-sci.net/IV-2/177/2018/isprs-annals-IV-2-177-2018.pdf

- P. Tutzauer and N. Haala, “Processing of Crawled Urban Imagery for Building Use Classification,” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences (ISPRS), vol. XLII-1/W1, pp. 143–149, 2017, doi: 10.5194/isprs-archives-XLII-1-W1-143-2017.

- D. Fritsch and M. Klein, “3D and 4D Modeling for AR and VR App Developments,” in Proceedings of the International Conference on Virtual System & Multimedia (VSMM), 2017, pp. 1–8. [Online]. Available: https://ieeexplore.ieee.org/document/8346270

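- D. Fritsch, “Photogrammetrische Auswertung digitaler Bilder – Neue Methoden der Kamerakalibration, dichten Bildzuordnung und Interpretation von Punktwolken” [Photogrammetric evaluation of digital images: new methods of camera calibration, dense image matching and interpretation of point clouds], in Photogrammetrie und Fernerkundung [Photogrammetry and Remote Sensing], C. Heipke, Ed., Springer Reference Naturwissenschaften (SRN). Springer Spektrum, 2017, pp. 157–196, doi: 10.1007/978-3-662-47094-7_41.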

- P. Tutzauer, S. Becker, and N. Haala, “Perceptual Rules for Building Enhancements in 3D Virtual Worlds,” i-com, vol. 16, no. 3, 2017, doi: 10.1515/icom-2017-0022.

- P. Tutzauer, S. Becker, D. Fritsch, T. Niese, and O. Deussen, “A Study of the Human Comprehension of Building Categories Based on Different 3D Building Representations,” Photogrammetrie - Fernerkundung - Geoinformation, vol. 2016, pp. 319–333, 2016, doi: 10.1127/pfg/2016/0302.

- P. Tutzauer, S. Becker, T. Niese, O. Deussen, and D. Fritsch, “Understanding Human Perception of Building Categories in Virtual 3D Cities – A User Study,” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences (ISPRS), vol. XLI-B2, pp. 683–687, 2016, [Online]. Available: https://www.int-arch-photogramm-remote-sens-spatial-inf-sci.net/XLI-B2/683/2016/isprs-archives-XLI-B2-683-2016.pdf

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.