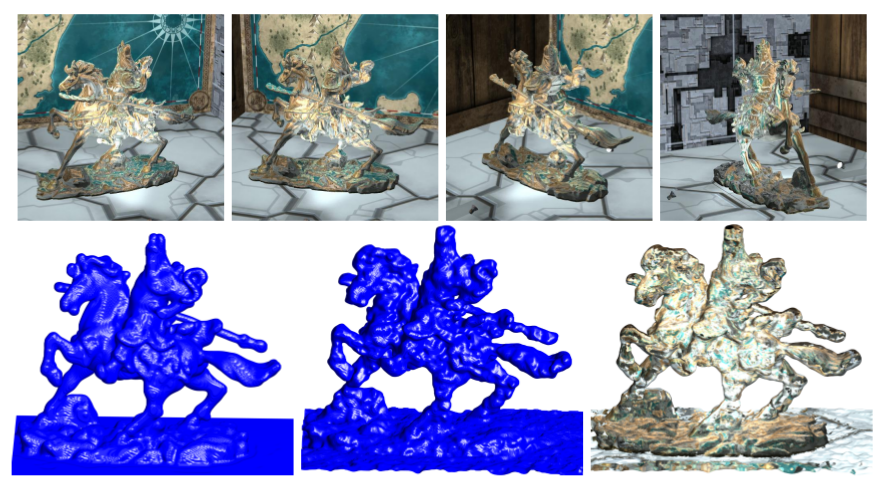

B05 | Efficient Large Scale Variational 3D Reconstruction

The central goal of the project is to research and develop high-performance variational methods for large-scale 3D reconstruction problems. These methods should be general and accurate while meeting the computation time constraints imposed by visual computing applications. Their key abilities will be that

- a variety of possible sources of 3D information can be integrated,

- accuracy can be locally improved at the cost of run-time, and

- for a given allowed total run-time, global accuracy is maximized according to application-specific metrics.

Research Questions

What is the best geometric representation to implement locally adaptive geometry optimization?

Which variational models efficiently allow local refinement of the scene structure in a mathematically consistent manner?

Which models in shape reconstruction best translate to the adaptive shape representation?

How can the local refinement of surface properties (e.g., texture) be modeled and optimized, ideally together with the geometry?

What are natural, ideally convex priors on adaptive grids?

What are optimal local accuracy measures for different applications?

How can an ideal trade-off between accuracy and run-time be determined?

Publications

- F. Petersen, B. Goldluecke, O. Deussen, and H. Kuehne, “Style Agnostic 3D Reconstruction via Adversarial Style Transfer,” in 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), IEEE, Jan. 2022, pp. 2273–2282. [Online]. Available: http://dblp.uni-trier.de/db/conf/wacv/wacv2022.html#PetersenGDK22

- F. Petersen, B. Goldluecke, C. Borgelt, and O. Deussen, “GenDR: A Generalized Differentiable Renderer,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 3992–4001. doi: 10.1109/CVPR52688.2022.00397.

- S. Giebenhain and B. Goldlücke, “AIR-Nets: An Attention-Based Framework for Locally Conditioned Implicit Representations,” in 2021 International Conference on 3D Vision (3DV), 2021, pp. 1054–1064. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9665836

- K. Kurzhals et al., “Visual Analytics and Annotation of Pervasive Eye Tracking Video,” in Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA), ACM, 2020, pp. 16:1–16:9. doi: 10.1145/3379155.3391326.

- V. Hosu, B. Goldlücke, and D. Saupe, “Effective Aesthetics Prediction with Multi-level Spatially Pooled Features,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2019, pp. 9367–9375. [Online]. Available: https://ieeexplore.ieee.org/document/8953497

- D. Maurer, N. Marniok, B. Goldluecke, and A. Bruhn, “Structure-from-motion-aware PatchMatch for Adaptive Optical Flow Estimation,” in Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol. 11212, V. Ferrari, M. Hebert, C. Sminchisescu, and Y. Weiss, Eds., Springer International Publishing, 2018, pp. 575–592. doi: 10.1007/978-3-030-01237-3_35.

- N. Marniok and B. Goldluecke, “Real-time Variational Range Image Fusion and Visualization for Large-scale Scenes using GPU Hash Tables,” in Proceedings of the IEEE Winter Conference on Applications of Computer Vision (WACV), 2018, pp. 912–920. [Online]. Available: https://ieeexplore.ieee.org/document/8354209

- N. Marniok, O. Johannsen, and B. Goldluecke, “An Efficient Octree Design for Local Variational Range Image Fusion,” in Pattern Recognition. GCPR 2017. Lecture Notes in Computer Science, vol. 10496, V. Roth and T. Vetter, Eds., Springer International Publishing, 2017, pp. 401–412. doi: 10.1007/978-3-319-66709-6_32.

- M. Stein et al., “Bring it to the Pitch: Combining Video and Movement Data to Enhance Team Sport Analysis,” in IEEE Transactions on Visualization and Computer Graphics, 2017, pp. 13–22. [Online]. Available: https://ieeexplore.ieee.org/document/8019849

- O. Johannsen et al., “A Taxonomy and Evaluation of Dense Light Field Depth Estimation Algorithms,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Workshops, IEEE, 2017, pp. 1795–1812. [Online]. Available: https://ieeexplore.ieee.org/document/8014960

- O. Johannsen, A. Sulc, N. Marniok, and B. Goldluecke, “Layered Scene Reconstruction from Multiple Light Field Camera Views,” in Computer Vision – ACCV 2016. ACCV 2016. Lecture Notes in Computer Science, vol. 10113, S.-H. Lai, V. Lepetit, K. Nishino, and Y. Sato, Eds., Springer International Publishing, 2016, pp. 3–18. doi: 10.1007/978-3-319-54187-7_1.

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.