A04 | Quantitative Models for Visual Abstraction

We aim to develop abstraction methods for visual computing that create graphical representations of given data with a quantitatively determined degree of abstraction. We will select appropriate abstraction styles and develop representations whose visual output can be quantified technically (e.g., by the number of graphical elements used). In a second step, we will develop methods that also perform abstraction in a perceptually linear way.

This project will provide visual abstraction methods for other projects of the Collaborative Research Center.

Research questions

Can we measure the degree of abstraction of a non-photorealistic rendering?

Can we create abstraction methods that maintain coherence between the abstract representations they produce?

Is it possible to parametrize different abstraction styles in a technically linear way?

Is the parametrization also adjustable in a perceptually linear way?
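
The difference between a technically linear and a perceptually linear parametrization can be illustrated with a minimal sketch. It assumes that the degree of abstraction is controlled through the number of graphical elements (as in the example given in the project description) and that some monotonic calibration between element count and perceived abstraction is available. The function names, the parameter `t`, and the calibration samples are hypothetical and serve only as an illustration; they are not taken from the project's publications.

```python
from bisect import bisect_left


def technically_linear_count(t, n_min, n_max):
    """Map an abstraction level t in [0, 1] linearly to an element count.

    t = 0 gives the most detailed image (n_max elements),
    t = 1 gives the most abstract image (n_min elements).
    """
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    return round(n_max - t * (n_max - n_min))


def perceptually_linear_count(t, samples):
    """Map a perceived abstraction level t to an element count.

    `samples` is a hypothetical calibration table of
    (perceived_abstraction, element_count) pairs, sorted by strictly
    increasing perceived abstraction. The count for t is obtained by
    piecewise-linear interpolation between neighbouring samples.
    """
    levels = [p for p, _ in samples]
    counts = [n for _, n in samples]
    if not levels[0] <= t <= levels[-1]:
        raise ValueError("t lies outside the calibrated range")
    i = bisect_left(levels, t)  # first sample with level >= t
    if levels[i] == t:
        return counts[i]
    p0, p1 = levels[i - 1], levels[i]
    n0, n1 = counts[i - 1], counts[i]
    w = (t - p0) / (p1 - p0)
    return round(n0 + w * (n1 - n0))


# Made-up calibration values, for illustration only.
calibration = [(0.0, 10000), (0.5, 2000), (1.0, 100)]
halfway_technical = technically_linear_count(0.5, 100, 10000)
halfway_perceptual = perceptually_linear_count(0.5, calibration)
```

With these made-up values, the halfway point of the technical scale and the halfway point of the perceptual scale correspond to different element counts, which is the gap the last research question addresses.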

Publications

- T. Ge et al., “Optimally Ordered Orthogonal Neighbor Joining Trees for Hierarchical Cluster Analysis,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–13, 2023, [Online]. Available: https://ieeexplore.ieee.org/document/10147241

- F. Petersen, B. Goldluecke, O. Deussen, and H. Kuehne, “Style Agnostic 3D Reconstruction via Adversarial Style Transfer,” in 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), IEEE, Jan. 2022, pp. 2273–2282. [Online]. Available: http://dblp.uni-trier.de/db/conf/wacv/wacv2022.html#PetersenGDK22

- F. Petersen, B. Goldluecke, C. Borgelt, and O. Deussen, “GenDR: A Generalized Differentiable Renderer,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), 2022, pp. 3992–4001. doi: 10.1109/CVPR52688.2022.00397.

- C. Bu et al., “SineStream: Improving the Readability of Streamgraphs by Minimizing Sine Illusion Effects,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 2, 2021, [Online]. Available: https://ieeexplore.ieee.org/document/9222035

- K. Lu et al., “Palettailor: Discriminable Colorization for Categorical Data,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 2, Feb. 2021, [Online]. Available: https://ieeexplore.ieee.org/document/9222351

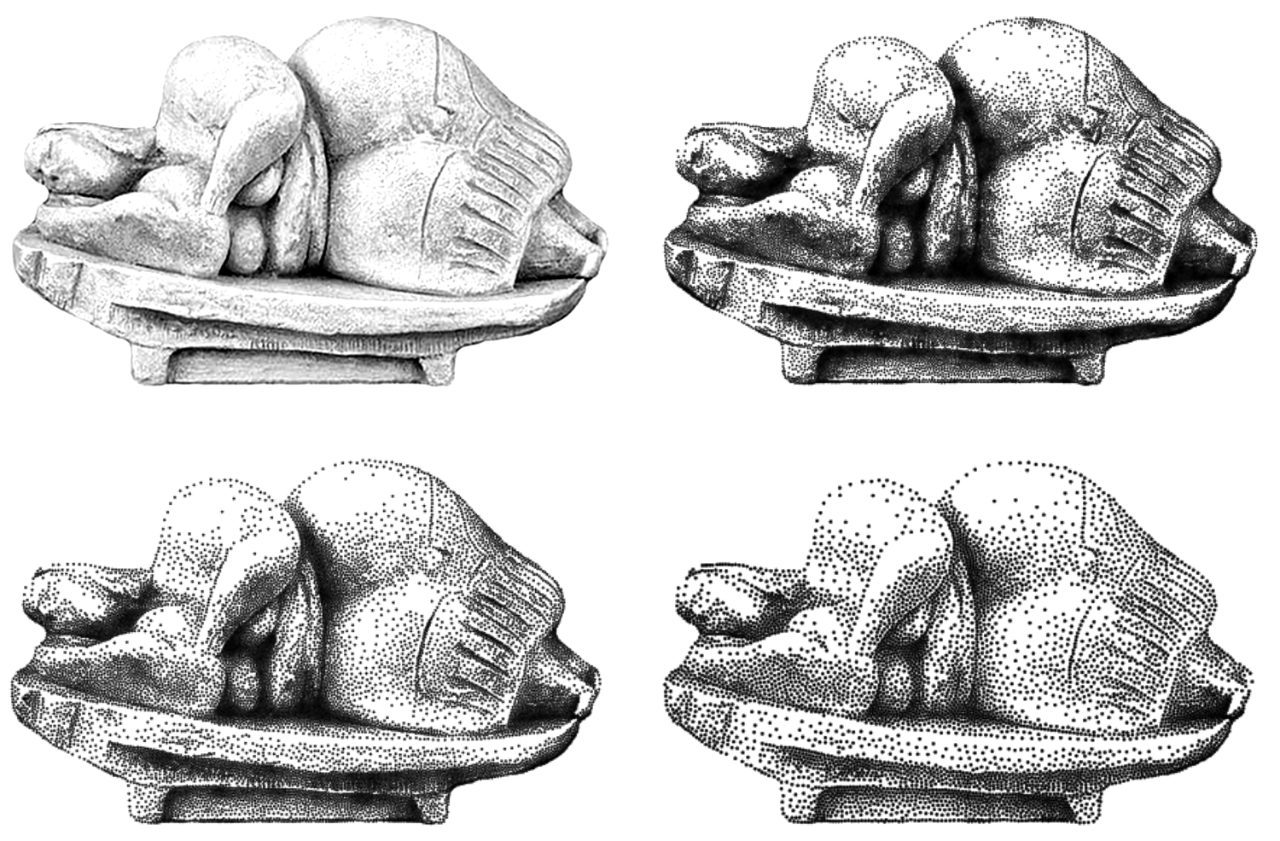

- C. Schulz et al., “Multi-Class Inverted Stippling,” ACM Transactions on Graphics, vol. 40, no. 6, Dec. 2021, doi: 10.1145/3478513.3480534.

- K. C. Kwan and H. Fu, “Automatic Image Checkpoint Selection for Guider-Follower Pedestrian Navigation,” Computer Graphics Forum, vol. 40, no. 1, 2021, doi: 10.1111/cgf.14192.

- Y. Chen, K. C. Kwan, L.-Y. Wei, and H. Fu, “Autocomplete Repetitive Stroking with Image Guidance,” in SIGGRAPH Asia 2021 Technical Communications, in SA ’21 Technical Communications. New York, NY, USA: Association for Computing Machinery, 2021. doi: 10.1145/3478512.3488595.

- D. Laupheimer, P. Tutzauer, N. Haala, and M. Spicker, “Neural Networks for the Classification of Building Use from Street-view Imagery,” ISPRS Annals of Photogrammetry, Remote Sensing and Spatial Information Sciences, pp. 177–184, 2018, [Online]. Available: https://www.isprs-ann-photogramm-remote-sens-spatial-inf-sci.net/IV-2/177/2018/isprs-annals-IV-2-177-2018.pdf

- O. Deussen, M. Spicker, and Q. Zheng, “Weighted Linde-Buzo-Gray Stippling,” ACM Transactions on Graphics, vol. 36, no. 6, Nov. 2017, doi: 10.1145/3130800.3130819.

- M. Spicker, F. Hahn, T. Lindemeier, D. Saupe, and O. Deussen, “Quantifying Visual Abstraction Quality for Stipple Drawings,” in Proceedings of the Symposium on Non-Photorealistic Animation and Rendering (NPAR), Association for Computing Machinery, 2017, pp. 8:1–8:10. doi: 10.1145/3092919.3092923.

- J. Kratt, F. Eisenkeil, M. Spicker, Y. Wang, D. Weiskopf, and O. Deussen, “Structure-aware Stylization of Mountainous Terrains,” in Vision, Modeling & Visualization, M. Hullin, R. Klein, T. Schultz, and A. Yao, Eds., The Eurographics Association, 2017. doi: 10.2312/vmv20171255.

- P. Tutzauer, S. Becker, T. Niese, O. Deussen, and D. Fritsch, “Understanding Human Perception of Building Categories in Virtual 3d Cities - a User Study,” The International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences (ISPRS), pp. 683–687, 2016, [Online]. Available: https://www.int-arch-photogramm-remote-sens-spatial-inf-sci.net/XLI-B2/683/2016/isprs-archives-XLI-B2-683-2016.pdf

- M. Spicker, J. Kratt, D. Arellano, and O. Deussen, “Depth-aware Coherent Line Drawings,” in Proceedings of the SIGGRAPH Asia Symposium on Computer Graphics and Interactive Techniques, Technical Briefs, ACM, 2015, pp. 1:1–1:5. doi: 10.1145/2820903.2820909.

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.