A08 | Learning and Explaining Dimensionality Reduction through Visualization

In recent years, machine learning has gained much attention for its ability to model complex human tasks, such as driving cars or composing music. In visualization research, there is currently a large effort to investigate how visualization can support machine learning research and practice.

In this project, we take the reverse perspective and investigate how machine learning can support visualization research and practice. In particular, we will leverage machine learning to build and evaluate a new generation of models for visual perception and design.

Visualizing data is a process that involves many delicate design choices: How should the data be aggregated? Which visual encoding should be used? And how should it be parametrized?
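The three questions above span a design space that can, in principle, be enumerated and ranked. The sketch below assumes a tiny hypothetical design space (two aggregations, two encodings, three opacity values) and a caller-supplied `score` function standing in for a model of perception. All option names, `enumerate_designs`, `rank_designs`, and the placeholder score are illustrative assumptions, not the project's method.

```python
from itertools import product

# Hypothetical design space: one axis per design question.
# The concrete options are illustrative assumptions, not the project's choices.
AGGREGATIONS = ["raw_points", "class_centroids"]  # How should the data be aggregated?
ENCODINGS = ["scatterplot", "density_heatmap"]    # Which visual encoding should be used?
OPACITIES = [0.25, 0.5, 1.0]                      # How should it be parametrized?


def enumerate_designs():
    """Return every combination of aggregation, encoding, and opacity."""
    return [
        {"aggregation": a, "encoding": e, "opacity": o}
        for a, e, o in product(AGGREGATIONS, ENCODINGS, OPACITIES)
    ]


def rank_designs(designs, score):
    """Sort designs from best to worst under a caller-supplied score function.

    `score` is a stand-in for a (possibly learned) perception model.
    Ties keep their enumeration order because Python's sort is stable.
    """
    return sorted(designs, key=score, reverse=True)


if __name__ == "__main__":
    designs = enumerate_designs()
    # Placeholder score for demonstration only: it prefers medium opacity.
    # It is NOT a validated perceptual model.
    placeholder = lambda d: -abs(d["opacity"] - 0.5)
    for d in rank_designs(designs, placeholder)[:3]:
        print(d)
```

Even this toy space yields 12 candidates; each additional axis multiplies the count, which is why a cheap, automatic scoring model is attractive compared with testing every alternative in a user study.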

In order to make good design choices, designers need to evaluate many alternative ways to aggregate and represent the data. To make working with the data easier and more effective, the project pursues three goals.

Goals

- Novel models for visual perception and design decisions.
- A new user-oriented research methodology.
- Evaluation and characterization of the methodology.

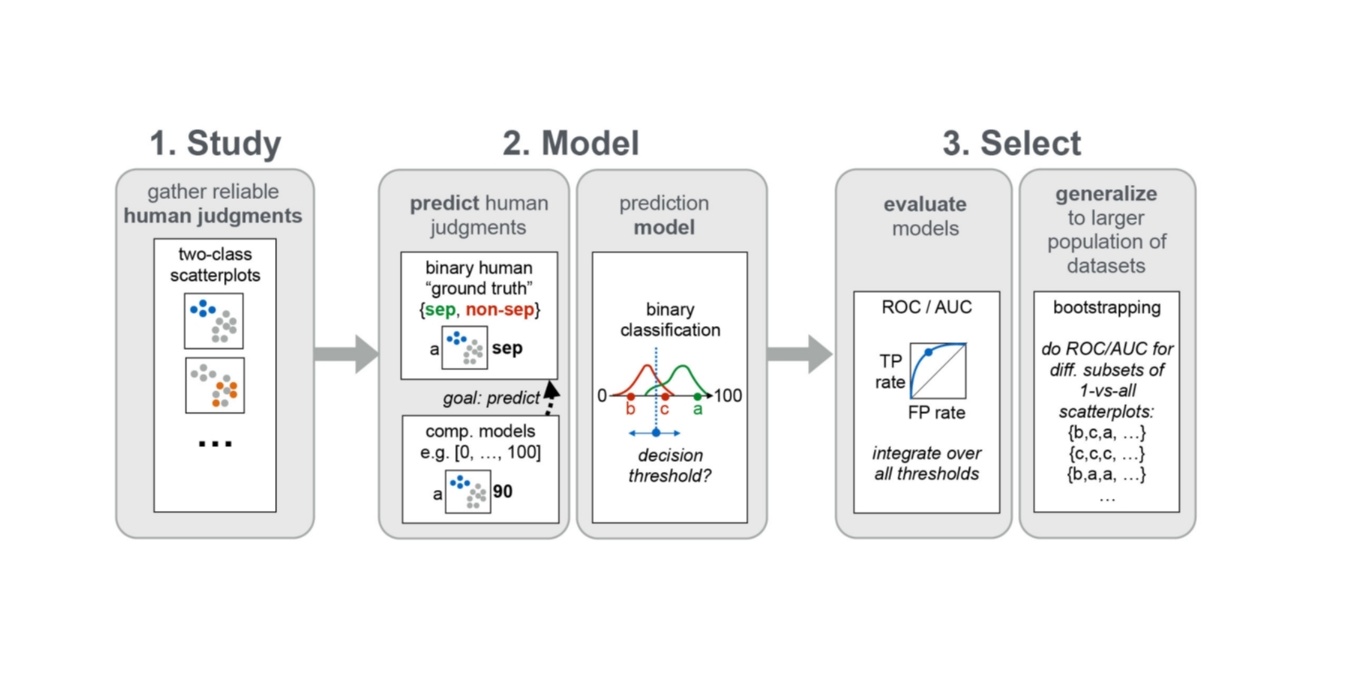

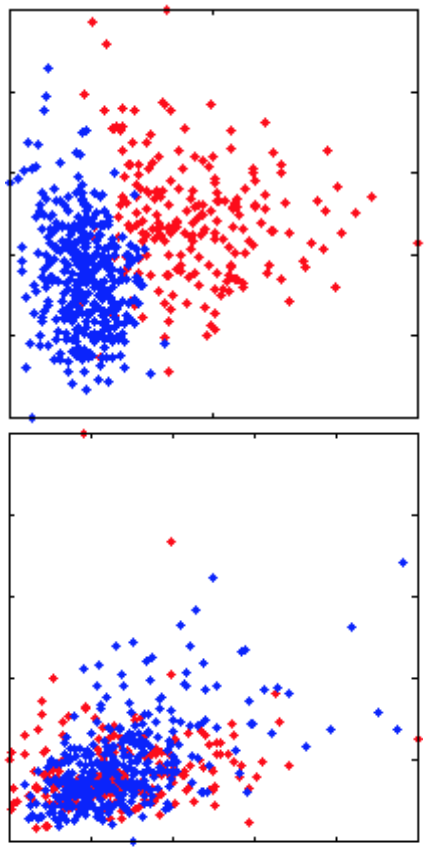

Fig. 1: Illustration of the proposed learning-based methodology, using class separation as an example. This novel user-oriented testing methodology will help us bridge quantitative and qualitative methods.
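To make "class separation" concrete, here is a minimal sketch of one classic hand-crafted separation measure, neighborhood hit: for a labeled 2D projection, it averages the fraction of each point's k nearest neighbours that share the point's class. It is chosen here only as an illustration of the kind of quantitative measure a learned perception model could be compared against; it is not the project's learned model, and the function name and the default `k=3` are assumptions.

```python
import math


def neighborhood_hit(points, labels, k=3):
    """Mean fraction of each point's k nearest 2D neighbours that share its label.

    1.0 means every neighbourhood is pure (classes look well separated);
    lower values mean the classes are more intermixed in the projection.
    """
    n = len(points)
    if n != len(labels):
        raise ValueError("points and labels must have the same length")
    if not 0 < k < n:
        raise ValueError("k must satisfy 0 < k < len(points)")

    total = 0.0
    for i, (xi, yi) in enumerate(points):
        # Euclidean distance from point i to every other point, nearest first.
        neighbours = sorted(
            (math.hypot(xi - xj, yi - yj), j)
            for j, (xj, yj) in enumerate(points)
            if j != i
        )
        # Count how many of the k nearest neighbours carry the same label.
        same = sum(1 for _, j in neighbours[:k] if labels[j] == labels[i])
        total += same / k
    return total / n
```

For two distant square clusters of four points each, labeling each cluster with its own class gives a score of 1.0, while alternating the labels within each cluster drops the score to 1/3. A hand-crafted measure like this captures only one notion of separation, which is precisely why human judgments are needed to check whether it matches what viewers actually perceive.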

Publications

- R. Bauer, M. Evers, Q. Q. Ngo, G. Reina, S. Frey, and M. Sedlmair, “Voronoi Cell Interface‐Based Parameter Sensitivity Analysis for Labeled Samples,” Computer Graphics Forum, May 2025, doi: 10.1111/cgf.70122.

- L. Xiao et al., “A Systematic Review of Ability-diverse Collaboration through Ability-based Lens in HCI,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, New York, NY, USA: ACM, 2024, pp. 1–21. doi: 10.1145/3613904.3641930.

- K. Angerbauer et al., “Is it Part of Me? Exploring Experiences of Inclusive Avatar Use For Visible and Invisible Disabilities in Social VR,” in The 26th International ACM SIGACCESS Conference on Computers and Accessibility, New York, NY, USA: ACM, 2024, pp. 1–15. doi: 10.1145/3663548.3675601.

- M. M. Hamza, E. Ullah, A. Baggag, H. Bensmail, M. Sedlmair, and M. Aupetit, “ClustML: A measure of cluster pattern complexity in scatterplots learnt from human-labeled groupings,” Information Visualization, vol. 23, no. 2, 2024, doi: 10.1177/14738716231220536.

- M. Jenadeleh et al., “An Image Quality Dataset with Triplet Comparisons for Multi-dimensional Scaling.” IEEE, pp. 278–281, 2024. doi: 10.1109/qomex61742.2024.10598258.

- C. Morariu, A. Bibal, R. Cutura, B. Frénay, and M. Sedlmair, “Predicting User Preferences of Dimensionality Reduction Embedding Quality,” IEEE Transactions on Visualization and Computer Graphics, vol. 29, no. 1, 2023, [Online]. Available: https://ieeexplore.ieee.org/document/9904619

- T. Ge et al., “Optimally Ordered Orthogonal Neighbor Joining Trees for Hierarchical Cluster Analysis,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–13, 2023, [Online]. Available: https://ieeexplore.ieee.org/document/10147241

- F. Heyen, Q. Q. Ngo, and M. Sedlmair, “Visual Overviews for Sheet Music Structure,” in Proceedings of the 24th International Society for Music Information Retrieval Conference (ISMIR) 2023, ISMIR, Dec. 2023, pp. 692–699. doi: 10.5281/zenodo.10265383.

- N. Doerr, K. Angerbauer, M. Reinelt, and M. Sedlmair, “Bees, Birds and Butterflies: Investigating the Influence of Distractors on Visual Attention Guidance Techniques,” in Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, in CHI EA ’23. New York, NY, USA: Association for Computing Machinery, 2023. doi: 10.1145/3544549.3585816.

- R. Bauer et al., “Visual Ensemble Analysis of Fluid Flow in Porous Media across Simulation Codes and Experiment,” Transport in Porous Media, 2023, doi: 10.1007/s11242-023-02019-y.

- K.-T. Chen et al., “Reading Strategies for Graph Visualizations That Wrap Around in Torus Topology,” in Proceedings of the 2023 Symposium on Eye Tracking Research and Applications, in ETRA ’23. New York, NY, USA: Association for Computing Machinery, 2023. doi: 10.1145/3588015.3589841.

- K. Angerbauer et al., “Accessibility for Color Vision Deficiencies: Challenges and Findings of a Large Scale Study on Paper Figures,” in Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems, in CHI ’22. New York, NY, USA: Association for Computing Machinery, 2022. doi: 10.1145/3491102.3502133.

- S. Dosdall, K. Angerbauer, L. Merino, M. Sedlmair, and D. Weiskopf, “Toward In-Situ Authoring of Situated Visualization with Chorded Keyboards,” in 15th International Symposium on Visual Information Communication and Interaction, VINCI 2022, Chur, Switzerland, August 16-18, 2022, M. Burch, G. Wallner, and D. Limberger, Eds., ACM, Aug. 2022, pp. 1–5. doi: 10.1145/3554944.3554970.

- G. Tkachev, R. Cutura, M. Sedlmair, S. Frey, and T. Ertl, “Metaphorical Visualization: Mapping Data to Familiar Concepts,” in CHI Conference on Human Factors in Computing Systems Extended Abstracts, ACM, Apr. 2022, pp. 1–10. doi: 10.1145/3491101.3516393.

- K. Klein, M. Sedlmair, and F. Schreiber, “Immersive Analytics: An Overview,” it - Information Technology, vol. 64, pp. 155–168, 2022, doi: 10.1515/itit-2022-0037.

- Q. Q. Ngo, F. L. Dennig, D. A. Keim, and M. Sedlmair, “Machine Learning Meets Visualization – Experiences and Lessons Learned,” it - Information Technology, vol. 64, pp. 169–180, 2022, doi: 10.1515/itit-2022-0034.

- M. Abdelaal, N. D. Schiele, K. Angerbauer, K. Kurzhals, M. Sedlmair, and D. Weiskopf, “Comparative Evaluation of Bipartite, Node-Link, and Matrix-Based Network Representations,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–11, 2022.

- M. Abdelaal, N. D. Schiele, K. Angerbauer, K. Kurzhals, M. Sedlmair, and D. Weiskopf, “Supplemental Materials for: Comparative Evaluation of Bipartite, Node-Link, and Matrix-Based Network Representations.” DaRUS, 2022. [Online]. Available: https://darus.uni-stuttgart.de/citation?persistentId=doi:10.18419/darus-3100

- G. Richer, A. Pister, M. Abdelaal, J.-D. Fekete, M. Sedlmair, and D. Weiskopf, “Scalability in Visualization,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–15, 2022.

- K. Angerbauer and M. Sedlmair, “Toward Inclusion and Accessibility in Visualization Research: Speculations on Challenges, Solution Strategies, and Calls for Action (Position Paper),” in 2022 IEEE Evaluation and Beyond - Methodological Approaches for Visualization (BELIV), Oct. 2022, pp. 20–27. [Online]. Available: https://ieeexplore.ieee.org/document/9978448

- P. Fleck, A. Sousa Calepso, S. Hubenschmid, M. Sedlmair, and D. Schmalstieg, “RagRug: A Toolkit for Situated Analytics,” IEEE Transactions on Visualization and Computer Graphics, 2022, [Online]. Available: https://pubmed.ncbi.nlm.nih.gov/35254986/

- R. Cutura, C. Morariu, Z. Cheng, Y. Wang, D. Weiskopf, and M. Sedlmair, “Hagrid — Gridify Scatterplots with Hilbert and Gosper Curves,” in The 14th International Symposium on Visual Information Communication and Interaction, in VINCI 2021. New York, NY, USA: Association for Computing Machinery, 2021, pp. 1:1–1:8. doi: 10.1145/3481549.3481569.

- C. Morariu, A. Bibal, R. Cutura, B. Frénay, and M. Sedlmair, “DumbleDR: Predicting User Preferences of Dimensionality Reduction Projection Quality,” 2021. [Online]. Available: https://arxiv.org/abs/2105.09275

- M. Kraus et al., “Immersive Analytics with Abstract 3D Visualizations: A Survey,” Computer Graphics Forum, 2021, doi: 10.1111/cgf.14430.

- C. Bu et al., “SineStream: Improving the Readability of Streamgraphs by Minimizing Sine Illusion Effects,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 2, 2021, [Online]. Available: https://ieeexplore.ieee.org/document/9222035

- R. Cutura, K. Angerbauer, F. Heyen, N. Hube, and M. Sedlmair, “DaRt: Generative Art using Dimensionality Reduction Algorithms,” in 2021 IEEE VIS Arts Program (VISAP), IEEE, 2021, pp. 59–72. [Online]. Available: https://ieeexplore.ieee.org/document/9622987

- N. Grossmann, J. Bernard, M. Sedlmair, and M. Waldner, “Does the Layout Really Matter? A Study on Visual Model Accuracy Estimation,” in IEEE Visualization Conference (VIS, Short Paper), 2021, pp. 61–65. [Online]. Available: https://arxiv.org/abs/2110.07188

- M. M. Abbas, E. Ullah, A. Baggag, H. Bensmail, M. Sedlmair, and M. Aupetit, “ClustRank: A Visual Quality Measure Trained on Perceptual Data for Sorting Scatterplots by Cluster Patterns,” 2021. [Online]. Available: https://arxiv.org/pdf/2106.00599.pdf

- K. Lu et al., “Palettailor: Discriminable Colorization for Categorical Data,” IEEE Transactions on Visualization and Computer Graphics, vol. 27, no. 2, Feb. 2021, [Online]. Available: https://ieeexplore.ieee.org/document/9222351

- J. Bernard, M. Hutter, M. Sedlmair, M. Zeppelzauer, and T. Munzner, “A Taxonomy of Property Measures to Unify Active Learning and Human-centered Approaches to Data Labeling,” ACM Transactions on Interactive Intelligent Systems (TiiS), vol. 11, pp. 1–42, 2021, doi: 10.1145/3439333.

- C. Krauter, J. Vogelsang, A. Sousa Calepso, K. Angerbauer, and M. Sedlmair, “Don’t Catch It: An Interactive Virtual-Reality Environment to Learn About COVID-19 Measures Using Gamification Elements,” in Mensch und Computer, ACM, 2021, pp. 593–596. doi: 10.1145/3473856.3474031.

- J. Bernard, M. Hutter, M. Zeppelzauer, M. Sedlmair, and T. Munzner, “ProSeCo: Visual analysis of class separation measures and dataset characteristics,” Computers & Graphics, vol. 96, pp. 48–60, 2021, [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0097849321000406

- G. J. Rijken et al., “Illegible Semantics: Exploring the Design Space of Metal Logos,” in IEEE VIS alt.VIS Workshop, 2021. [Online]. Available: https://arxiv.org/abs/2109.01688

- M. Kraus, K. Klein, J. Fuchs, D. A. Keim, F. Schreiber, and M. Sedlmair, “The Value of Immersive Visualization,” IEEE Computer Graphics and Applications (CG&A), vol. 41, no. 4, 2021, doi: 10.1109/MCG.2021.3075258.

- L. Merino, M. Schwarzl, M. Kraus, M. Sedlmair, D. Schmalstieg, and D. Weiskopf, “Evaluating Mixed and Augmented Reality: A Systematic Literature Review (2009 – 2019),” in IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9284762

- P. Balestrucci et al., “Pipelines Bent, Pipelines Broken: Interdisciplinary Self-Reflection on the Impact of COVID-19 on Current and Future Research (Position Paper),” in 2020 IEEE Workshop on Evaluation and Beyond-Methodological Approaches to Visualization (BELIV), IEEE, 2020, pp. 11–18. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9307759

- M. Kraus et al., “Assessing 2D and 3D Heatmaps for Comparative Analysis: An Empirical Study,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, 2020, pp. 546:1–546:14. doi: 10.1145/3313831.3376675.

- A. Streichert, K. Angerbauer, M. Schwarzl, and M. Sedlmair, “Comparing Input Modalities for Shape Drawing Tasks,” in Proceedings of the Symposium on Eye Tracking Research & Applications-Short Papers (ETRA-SP), in ETRA ’20 Short Papers. ACM, 2020, pp. 1–5. doi: 10.1145/3379156.3391830.

- J. Bernard, M. Hutter, M. Zeppelzauer, M. Sedlmair, and T. Munzner, “SepEx: Visual Analysis of Class Separation Measures,” in Proceedings of the International Workshop on Visual Analytics (EuroVA), C. Turkay and K. Vrotsou, Eds., The Eurographics Association, 2020, pp. 1–5. doi: 10.2312/eurova.20201079.

- F. Heyen et al., “ClaVis: An Interactive Visual Comparison System for Classifiers,” in Proceedings of the International Conference on Advanced Visual Interfaces (AVI), in AVI ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 9:1–9:9. doi: 10.1145/3399715.3399814.

- N. Pathmanathan et al., “Eye vs. Head: Comparing Gaze Methods for Interaction in Augmented Reality,” in Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA), ACM, 2020, pp. 50:1–50:5. doi: 10.1145/3379156.3391829.

- M. Kraus et al., “A Comparative Study of Orientation Support Tools in Virtual Reality Environments with Virtual Teleportation,” in 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020, pp. 227–238. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9284697

- K. Kurzhals, F. Göbel, K. Angerbauer, M. Sedlmair, and M. Raubal, “A View on the Viewer: Gaze-Adaptive Captions for Videos,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, 2020, pp. 139:1–139:12. doi: 10.1145/3313831.3376266.

- L. Merino et al., “Toward Agile Situated Visualization: An Exploratory User Study,” in Proceedings of the CHI Conference on Human Factors in Computing Systems-Extended Abstracts (CHI-EA), 2020, pp. LBW087:1–LBW087:7. doi: 10.1145/3334480.3383017.

- S. Öney et al., “Evaluation of Gaze Depth Estimation from Eye Tracking in Augmented Reality,” in Proceedings of the Symposium on Eye Tracking Research & Applications-Short Paper (ETRA-SP), ACM, 2020, pp. 49:1–49:5. doi: 10.1145/3379156.3391835.

- M. Aupetit, M. Sedlmair, M. M. Abbas, A. Baggag, and H. Bensmail, “Toward Perception-based Evaluation of Clustering Techniques for Visual Analytics,” in Proceedings of the IEEE Visualization Conference (VIS), IEEE, 2019, pp. 141–145. [Online]. Available: https://ieeexplore.ieee.org/document/8933620

- Y. Wang et al., “Improving the Robustness of Scagnostics,” IEEE Transactions on Visualization and Computer Graphics, vol. 26, no. 1, 2019, [Online]. Available: https://ieeexplore.ieee.org/document/8807247

- T. Torsney-Weir, S. Afroozeh, M. Sedlmair, and T. Möller, “Risk Fixers and Sweet Spotters: a Study of the Different Approaches to Using Visual Sensitivity Analysis in an Investment Scenario,” in Proceedings of the Eurographics Conference on Visualization (EuroVis), J. Johansson, F. Sadlo, and T. Schreck, Eds., Eurographics Association, 2018, pp. 119–123. doi: 10.5555/3290776.3290801.

- A. C. Valdez, M. Ziefle, and M. Sedlmair, “Priming and Anchoring Effects in Visualization,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, no. 1, 2018, [Online]. Available: https://ieeexplore.ieee.org/document/8022891

- Y. Wang et al., “A Perception-driven Approach to Supervised Dimensionality Reduction for Visualization,” IEEE Transactions on Visualization and Computer Graphics, vol. 24, no. 5, 2018, [Online]. Available: https://www.computer.org/csdl/journal/tg/2018/05/07920403/13rRUEgs2M7

- M. Aupetit and M. Sedlmair, “SepMe: 2002 New Visual Separation Measures,” in Proceedings of the IEEE Pacific Visualization Symposium (PacificVis), C. Hansen, I. Viola, and X. Yuan, Eds., IEEE, 2016, pp. 1–8. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/7465244

- M. Sedlmair and M. Aupetit, “Data-driven Evaluation of Visual Quality Measures,” Computer Graphics Forum, vol. 34, no. 3, 2015, doi: 10.5555/2858877.2858899.


© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.