C02 | Physiologically Based Interaction and Adaptive Visualization

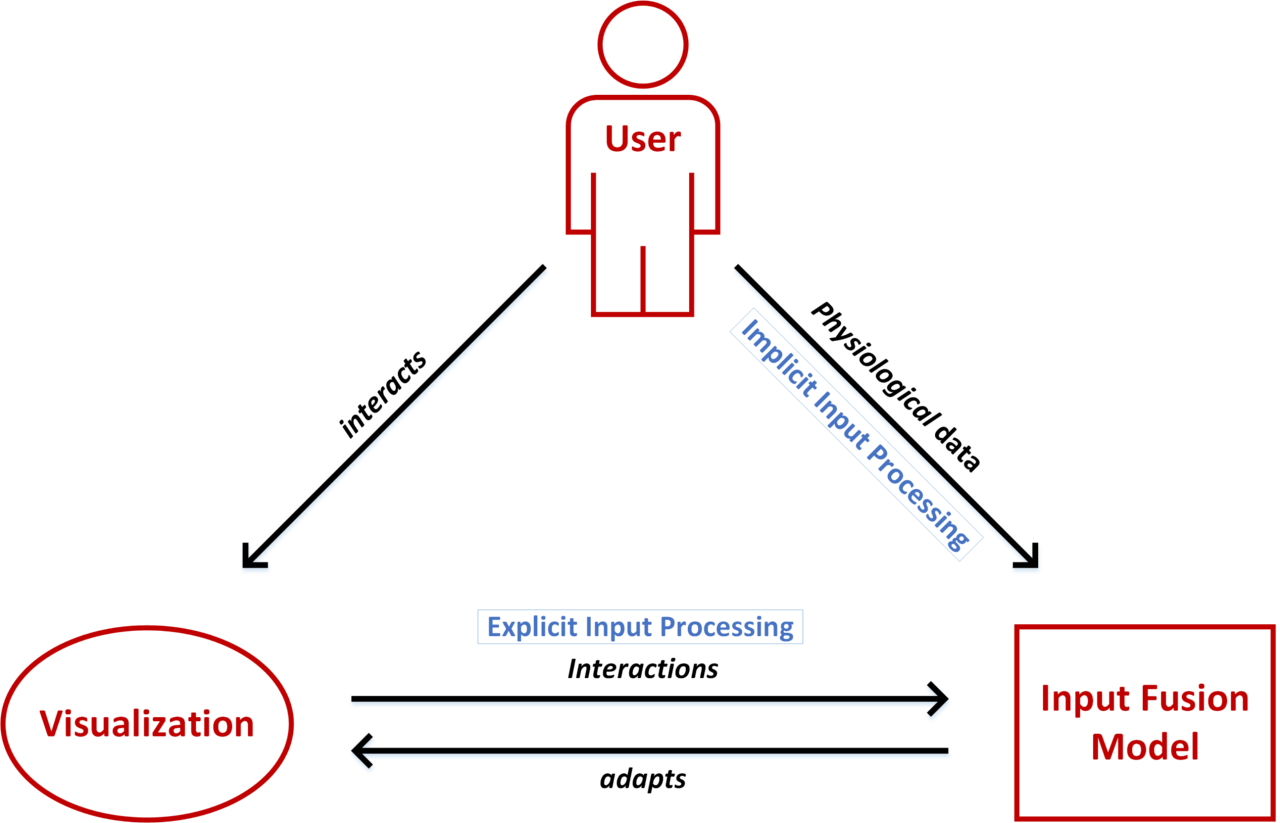

In this project, we research new methods and techniques for cognition-aware visualizations. The basic idea is that a cognition-aware adaptive visualization observes a person’s physiological responses while they interact with a system and uses these responses as implicit input. Electrical signals measured on the body (e.g., EEG, EMG, ECG, galvanic skin response), changes in physiological parameters (e.g., body temperature, respiration rate, and pulse), and the users’ gaze behavior are used to estimate cognitive load and understanding. Through experimental research, concepts and models for adaptive and dynamic visualizations will be created. Frameworks and tools will be realized and empirically validated.

Research Questions

How can we optimize interactive visualization to create the best possible user experience and to maximize task performance?

Answering this requires addressing the following questions:

What are relevant quantitative parameters for the user experience in visualization tasks?

How can we estimate these parameters based on physiological measurements?

What forms of adaptation support influencing the experienced cognitive load?

How can these mechanisms be designed and implemented to change and adapt interactive visualizations?

Publications

- T. Kosch, M. Funk, A. Schmidt, and L. L. Chuang, “Identifying Cognitive Assistance with Mobile Electroencephalography: A Case Study with In-Situ Projections for Manual Assembly,” Proceedings of the ACM on Human-Computer Interaction (ACMHCI), vol. 2, pp. 11:1–11:20, 2018, doi: 10.1145/3229093.

- T. Dingler, R. Rzayev, A. S. Shirazi, and N. Henze, “Designing Consistent Gestures Across Device Types: Eliciting RSVP Controls for Phone, Watch, and Glasses,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, R. L. Mandryk, M. Hancock, M. Perry, and A. L. Cox, Eds., ACM, 2018, pp. 419:1–419:12. doi: 10.1145/3173574.3173993.

- J. Karolus, H. Schuff, T. Kosch, P. W. Woźniak, and A. Schmidt, “EMGuitar: Assisting Guitar Playing with Electromyography,” in Proceedings of the Designing Interactive Systems Conference (DIS), I. Koskinen, Y.-K. Lim, T. C. Pargman, K. K. N. Chow, and W. Odom, Eds., ACM, 2018, pp. 651–655. doi: 10.1145/3196709.3196803.

- J. Karolus, P. W. Woźniak, L. L. Chuang, and A. Schmidt, “Robust Gaze Features for Enabling Language Proficiency Awareness,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, G. Mark, S. R. Fussell, C. Lampe, m. c. schraefel, J. P. Hourcade, C. Appert, and D. Wigdor, Eds., ACM, 2017, pp. 2998–3010. doi: 10.1145/3025453.3025601.

- L. Lischke, S. Mayer, K. Wolf, N. Henze, H. Reiterer, and A. Schmidt, “Screen arrangements and interaction areas for large display work places,” in PerDis ’16 Proceedings of the 5th ACM International Symposium on Pervasive Displays, ACM, 2016, pp. 228–234. doi: 10.1145/2914920.2915027.

- D. Weiskopf, M. Burch, L. L. Chuang, B. Fischer, and A. Schmidt, Eye Tracking and Visualization: Foundations, Techniques, and Applications. Berlin, Heidelberg: Springer, 2016. [Online]. Available: https://www.springer.com/de/book/9783319470238

- J. Karolus, P. W. Woźniak, and L. L. Chuang, “Towards Using Gaze Properties to Detect Language Proficiency,” in Proceedings of the 9th Nordic Conference on Human-Computer Interaction (NordiCHI), New York, NY, USA: ACM, 2016, pp. 118:1–118:6. doi: 10.1145/2971485.2996753.

- L. Lischke, V. Schwind, K. Friedrich, A. Schmidt, and N. Henze, “MAGIC-Pointing on Large High-Resolution Displays,” in Proceedings of the CHI Conference on Human Factors in Computing Systems-Extended Abstracts (CHI-EA), ACM, 2016, pp. 1706–1712. doi: 10.1145/2851581.2892479.

- N. Flad, J. C. Ditz, A. Schmidt, H. H. Bülthoff, and L. L. Chuang, “Data-Driven Approaches to Unrestricted Gaze-Tracking Benefit from Saccade Filtering,” in Proceedings of the Second Workshop on Eye Tracking and Visualization (ETVIS), M. Burch, L. L. Chuang, and A. T. Duchowski, Eds., IEEE, 2016, pp. 1–5. [Online]. Available: https://ieeexplore.ieee.org/document/7851156

- L. Lischke, P. Knierim, and H. Klinke, “Mid-Air Gestures for Window Management on Large Displays,” in Mensch und Computer 2015 – Tagungsband (MuC), De Gruyter Oldenbourg, 2015, pp. 439–442. doi: 10.1515/9783110443929-072.

- L. Lischke, J. Grüninger, K. Klouche, A. Schmidt, P. Slusallek, and G. Jacucci, “Interaction Techniques for Wall-Sized Screens,” Proceedings of the International Conference on Interactive Tabletops & Surfaces (ITS), pp. 501–504, 2015, doi: 10.1145/2817721.2835071.



© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.