C06 | User-Adaptive Mixed Reality

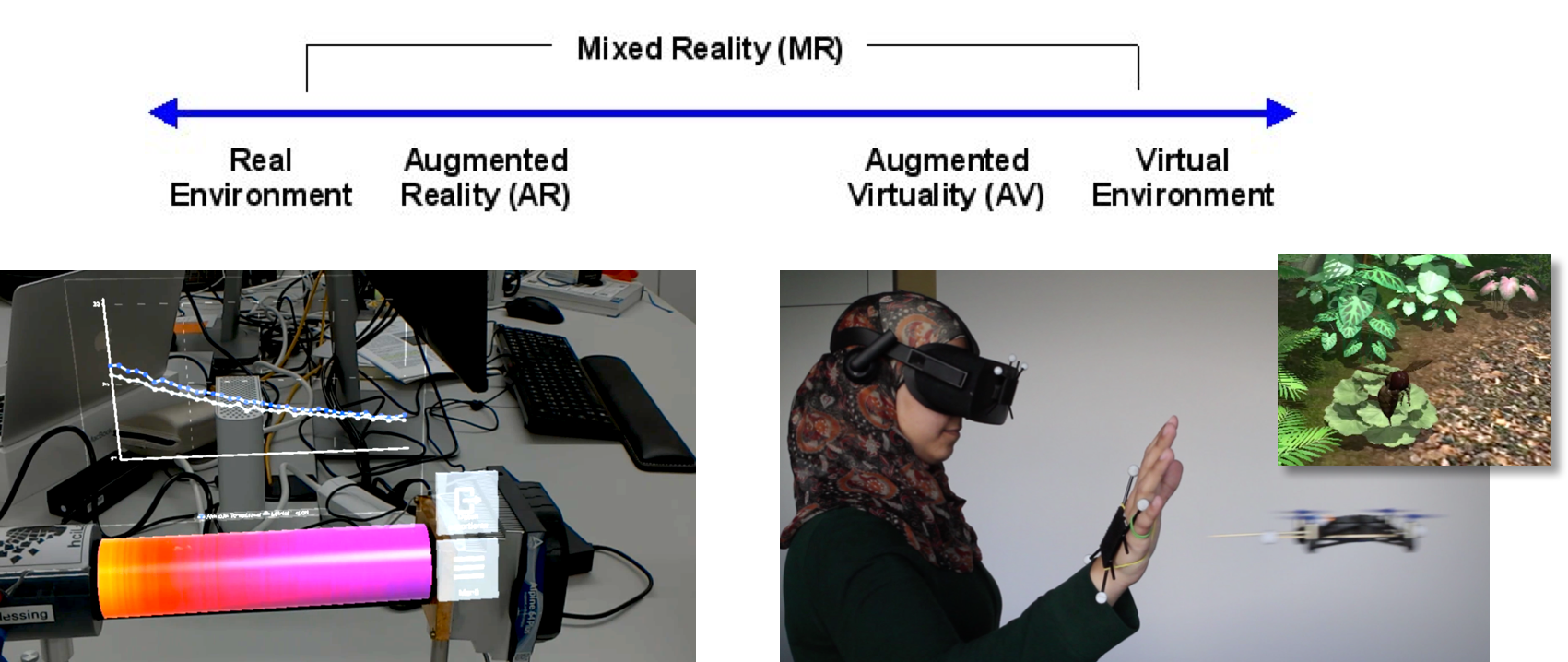

Mixed reality (MR) systems span the entire spectrum that ranges from physical reality to virtual reality (VR). This spectrum includes instances that overlay virtual content on physical information, i.e., Augmented Reality (AR), as well as those that rely on physical content to increase the realism of virtual environments, i.e., Augmented Virtuality (AV). In such instances, the blend of physical and virtual content tends to be pre-defined.

This project will investigate whether this blend can adapt to user states, which are inferred from physiological measurements derived from gaze behavior, peripheral physiology (e.g., electrodermal activity (EDA) and electrocardiography (ECG)), and cortical activity (i.e., electroencephalography (EEG)). In other words, we will investigate the viability and usefulness of MR use scenarios that vary in their blend of virtual and physical content according to the user's physiology.

In particular, we intend to investigate how inferred states of user arousal and attention can be leveraged for creating MR scenarios that benefit the user’s ability to process information.
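As a purely illustrative sketch of such a physiology-driven blend, the Python snippet below nudges a single blend value (0.0 for fully physical, 1.0 for fully virtual) toward less virtual content when a normalized arousal estimate exceeds a target level, and toward more when it falls below. The names `BlendController` and `normalize`, the target arousal of 0.5, the gain of 0.2, and the direction of the adaptation are all assumptions made for illustration; they are not the project's actual method.

```python
from dataclasses import dataclass


def clamp(value: float, low: float = 0.0, high: float = 1.0) -> float:
    """Restrict a value to the closed interval [low, high]."""
    return max(low, min(high, value))


def normalize(sample: float, baseline_min: float, baseline_max: float) -> float:
    """Min-max scale a raw physiological sample against a baseline range.

    The baseline range is assumed to come from a calibration phase.
    Returns 0.5 if the baseline range is degenerate.
    """
    span = baseline_max - baseline_min
    if span <= 0:
        return 0.5
    return clamp((sample - baseline_min) / span)


@dataclass
class BlendController:
    """Hypothetical controller: 0.0 = fully physical, 1.0 = fully virtual."""

    target_arousal: float = 0.5  # assumed desirable arousal level (normalized)
    gain: float = 0.2            # assumed step size per update
    blend: float = 0.5           # current share of virtual content

    def update(self, arousal: float) -> float:
        """Move the blend toward less virtual content when arousal is high,
        and toward more virtual content when arousal is low (an assumption)."""
        error = self.target_arousal - clamp(arousal)
        self.blend = clamp(self.blend + self.gain * error)
        return self.blend


if __name__ == "__main__":
    controller = BlendController()
    # Hypothetical raw EDA samples with an assumed baseline range of 2 to 10.
    for raw_eda in (9.0, 8.5, 4.0, 2.5):
        arousal = normalize(raw_eda, baseline_min=2.0, baseline_max=10.0)
        print(f"arousal={arousal:.2f} -> blend={controller.update(arousal):.3f}")
```

A proportional update of this kind changes the blend gradually rather than switching abruptly; a real system would likely also need signal filtering and per-user calibration.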

This will build on the acquired expertise and experience of Projects C02 and C03.

The areas of application for MR scenarios are diverse. Possible applications include haptic assembly, automated vehicle cockpits, and teamwork analyses of neuroscience and biochemistry datasets.

Research Questions

To what extent can MR systems rely on physiological inputs to infer user state and expectations and, in doing so, adapt their visualization in response?

How much information can we provide to users of MR systems, across the various sensory modalities, without resulting in ‘information overload’?

How can users transition between physical and virtual reality and what means should be employed to facilitate this process?

How can computer-supported cooperative work be implemented in a single MR environment that is informed by the physiological inputs of multiple users?

Publications

- F. Draxler, A. Schmidt, and L. L. Chuang, “Relevance, Effort, and Perceived Quality: Language Learners’ Experiences with AI-Generated Contextually Personalized Learning Material,” in Proceedings of the 2023 ACM Designing Interactive Systems Conference, in DIS ’23. New York, NY, USA: Association for Computing Machinery, 2023, pp. 2249–2262. doi: 10.1145/3563657.3596112.

- L. Hirsch, F. Müller, F. Chiossi, T. Benga, and A. M. Butz, “My Heart Will Go On: Implicitly Increasing Social Connectedness by Visualizing Asynchronous Players’ Heartbeats in VR Games,” Proc. ACM Hum.-Comput. Interact., vol. 7, Oct. 2023, doi: 10.1145/3611057.

- T. Kosch, J. Karolus, J. Zagermann, H. Reiterer, A. Schmidt, and P. W. Woźniak, “A Survey on Measuring Cognitive Workload in Human-Computer Interaction,” ACM Comput. Surv., Jan. 2023, doi: 10.1145/3582272.

- C. Schneegass, M. L. Wilson, H. A. Maior, F. Chiossi, A. L. Cox, and J. Wiese, “The Future of Cognitive Personal Informatics,” in Proceedings of the 25th International Conference on Mobile Human-Computer Interaction, in MobileHCI ’23 Companion. New York, NY, USA: Association for Computing Machinery, 2023. doi: 10.1145/3565066.3609790.

- E. Pangratz, F. Chiossi, S. Villa, K. Gramann, and L. Gehrke, “Towards an Implicit Metric of Sensory-Motor Accuracy: Brain Responses to Auditory Prediction Errors in Pianists,” in Proceedings of the 15th Conference on Creativity and Cognition, in C&C ’23. New York, NY, USA: Association for Computing Machinery, 2023, pp. 129–138. doi: 10.1145/3591196.3593340.

- A. Huang, P. Knierim, F. Chiossi, L. L. Chuang, and R. Welsch, “Proxemics for Human-Agent Interaction in Augmented Reality,” in CHI Conference on Human Factors in Computing Systems, 2022, pp. 1–13. doi: 10.1145/3491102.3517593.

- T. Kosch, R. Welsch, L. L. Chuang, and A. Schmidt, “The Placebo Effect of Artificial Intelligence in Human-Computer Interaction,” ACM Transactions on Computer-Human Interaction, 2022, doi: 10.1145/3529225.

- F. Chiossi et al., “Adapting visualizations and interfaces to the user,” it - Information Technology, vol. 64, pp. 133–143, 2022, doi: 10.1515/itit-2022-0035.

- J. Zagermann et al., “Complementary Interfaces for Visual Computing,” it - Information Technology, vol. 64, pp. 145–154, 2022, doi: 10.1515/itit-2022-0031.

- C. Schneegass, V. Füseschi, V. Konevych, and F. Draxler, “Investigating the Use of Task Resumption Cues to Support Learning in Interruption-Prone Environments,” Multimodal Technologies and Interaction, vol. 6, Art. no. 1, 2022, [Online]. Available: https://www.mdpi.com/2414-4088/6/1/2

- D. Dietz et al., “Walk This Beam: Impact of Different Balance Assistance Strategies and Height Exposure on Performance and Physiological Arousal in VR,” in 28th ACM Symposium on Virtual Reality Software and Technology, 2022, pp. 1–12. doi: 10.1145/3562939.3567818.

- F. Chiossi, R. Welsch, S. Villa, L. L. Chuang, and S. Mayer, “Virtual Reality Adaptation Using Electrodermal Activity to Support the User Experience,” Big Data and Cognitive Computing, vol. 6, Art. no. 2, 2022, [Online]. Available: https://www.mdpi.com/2504-2289/6/2/55

- D. Bethge et al., “VEmotion: Using Driving Context for Indirect Emotion Prediction in Real-Time,” in The 34th Annual ACM Symposium on User Interface Software and Technology, New York, NY, USA: Association for Computing Machinery, 2021, pp. 638–651. doi: 10.1145/3472749.3474775.

- F. Draxler, C. Schneegass, J. Safranek, and H. Hussmann, “Why Did You Stop? - Investigating Origins and Effects of Interruptions during Mobile Language Learning,” in Mensch Und Computer 2021, in MuC ’21. New York, NY, USA: Association for Computing Machinery, 2021, pp. 21–33. doi: 10.1145/3473856.3473881.

- F. Draxler, A. Labrie, A. Schmidt, and L. L. Chuang, “Augmented Reality to Enable Users in Learning Case Grammar from Their Real-World Interactions,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, ACM, 2020, pp. 410:1–410:12. doi: 10.1145/3313831.3376537.

- T. Kosch, A. Schmidt, S. Thanheiser, and L. L. Chuang, “One Does Not Simply RSVP: Mental Workload to Select Speed Reading Parameters Using Electroencephalography,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, ACM, 2020, pp. 637:1–637:13. doi: 10.1145/3313831.3376766.

- P. Balestrucci et al., “Pipelines Bent, Pipelines Broken: Interdisciplinary Self-Reflection on the Impact of COVID-19 on Current and Future Research (Position Paper),” in 2020 IEEE Workshop on Evaluation and Beyond-Methodological Approaches to Visualization (BELIV), IEEE, 2020, pp. 11–18. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9307759

- U. Ju, L. L. Chuang, and C. Wallraven, “Acoustic Cues Increase Situational Awareness in Accident Situations: A VR Car-Driving Study,” IEEE Transactions on Intelligent Transportation Systems, pp. 1–11, 2020, [Online]. Available: https://ieeexplore.ieee.org/document/9261134

- T. M. Benz, B. Riedl, and L. L. Chuang, “Projection Displays Induce Less Simulator Sickness than Head-Mounted Displays in a Real Vehicle Driving Simulator,” in Proceedings of the International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI), C. P. Janssen, S. F. Donker, L. L. Chuang, and W. Ju, Eds., ACM, 2019, pp. 379–387. doi: 10.1145/3342197.3344515.

- T. Munz, L. L. Chuang, S. Pannasch, and D. Weiskopf, “VisME: Visual microsaccades explorer,” Journal of Eye Movement Research, vol. 12, Art. no. 6, Dec. 2019, [Online]. Available: https://bop.unibe.ch/JEMR/article/view/JEMR.12.6.5

- C. Glatz, S. S. Krupenia, H. H. Bülthoff, and L. L. Chuang, “Use the Right Sound for the Right Job: Verbal Commands and Auditory Icons for a Task-Management System Favor Different Information Processes in the Brain,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, R. L. Mandryk, M. Hancock, M. Perry, and A. L. Cox, Eds., ACM, 2018, pp. 472:1–472:13. doi: 10.1145/3173574.3174046.

- T. Kosch, M. Funk, A. Schmidt, and L. L. Chuang, “Identifying Cognitive Assistance with Mobile Electroencephalography: A Case Study with In-Situ Projections for Manual Assembly,” Proceedings of the ACM on Human-Computer Interaction (ACMHCI), vol. 2, pp. 11:1–11:20, 2018, doi: 10.1145/3229093.

- K. Hänsel, R. Poguntke, H. Haddadi, A. Alomainy, and A. Schmidt, “What to Put on the User: Sensing Technologies for Studies and Physiology Aware Systems,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, R. L. Mandryk, M. Hancock, M. Perry, and A. L. Cox, Eds., ACM, 2018, pp. 145:1–145:14. doi: 10.1145/3173574.3173719.

- M. Scheer, H. H. Bülthoff, and L. L. Chuang, “Auditory Task Irrelevance: A Basis for Inattentional Deafness,” Human Factors, vol. 60, Art. no. 3, 2018, doi: 10.1177/0018720818760919.

- T. Dingler, A. Schmidt, and T. Machulla, “Building Cognition-Aware Systems: A Mobile Toolkit for Extracting Time-of-Day Fluctuations of Cognitive Performance,” Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), vol. 1, Art. no. 3, 2017, doi: 10.1145/3132025.

- J. Allsop, R. Gray, H. H. Bülthoff, and L. L. Chuang, “Eye Movement Planning on Single-Sensor-Single-Indicator Displays is Vulnerable to User Anxiety and Cognitive Load,” Journal of Eye Movement Research, vol. 10, Art. no. 5, 2017, doi: 10.16910/jemr.10.5.8.

- J. Karolus, P. W. Woźniak, L. L. Chuang, and A. Schmidt, “Robust Gaze Features for Enabling Language Proficiency Awareness,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, G. Mark, S. R. Fussell, C. Lampe, m. c. schraefel, J. P. Hourcade, C. Appert, and D. Wigdor, Eds., ACM, 2017, pp. 2998–3010. doi: 10.1145/3025453.3025601.

- Y. Abdelrahman, P. Knierim, P. W. Woźniak, N. Henze, and A. Schmidt, “See Through the Fire: Evaluating the Augmentation of Visual Perception of Firefighters Using Depth and Thermal Cameras,” in Proceedings of the ACM International Joint Conference on Pervasive and Ubiquitous Computing and Symposium on Wearable Computers (UbiComp/ISWC), S. C. Lee, L. Takayama, and K. N. Truong, Eds., ACM, 2017, pp. 693–696. doi: 10.1145/3123024.3129269.

- L. L. Chuang, C. Glatz, and S. S. Krupenia, “Using EEG to Understand why Behavior to Auditory In-vehicle Notifications Differs Across Test Environments,” in Proceedings of the International Conference on Automotive User Interfaces and Interactive Vehicular Applications (AutomotiveUI), S. Boll, B. Pfleging, B. Donmez, I. Politis, and D. R. Large, Eds., ACM, 2017, pp. 123–133. doi: 10.1145/3122986.3123017.

- M. Greis, P. El.Agroudy, H. Schuff, T. Machulla, and A. Schmidt, “Decision-Making under Uncertainty: How the Amount of Presented Uncertainty Influences User Behavior,” in Proceedings of the 9th Nordic Conference on Human-Computer Interaction (NordiCHI), ACM, 2016. doi: 10.1145/2971485.2971535.

- B. Pfleging, D. K. Fekety, A. Schmidt, and A. L. Kun, “A Model Relating Pupil Diameter to Mental Workload and Lighting Conditions,” in Proceedings of the CHI Conference on Human Factors in Computing Systems, J. Kaye, A. Druin, C. Lampe, D. Morris, and J. P. Hourcade, Eds., ACM, 2016, pp. 5776–5788. doi: 10.1145/2858036.2858117.


© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.