A05 | Image/Video Quality Assessment: From Test Databases to Similarity-Aware and Perceptual Dynamic Metrics

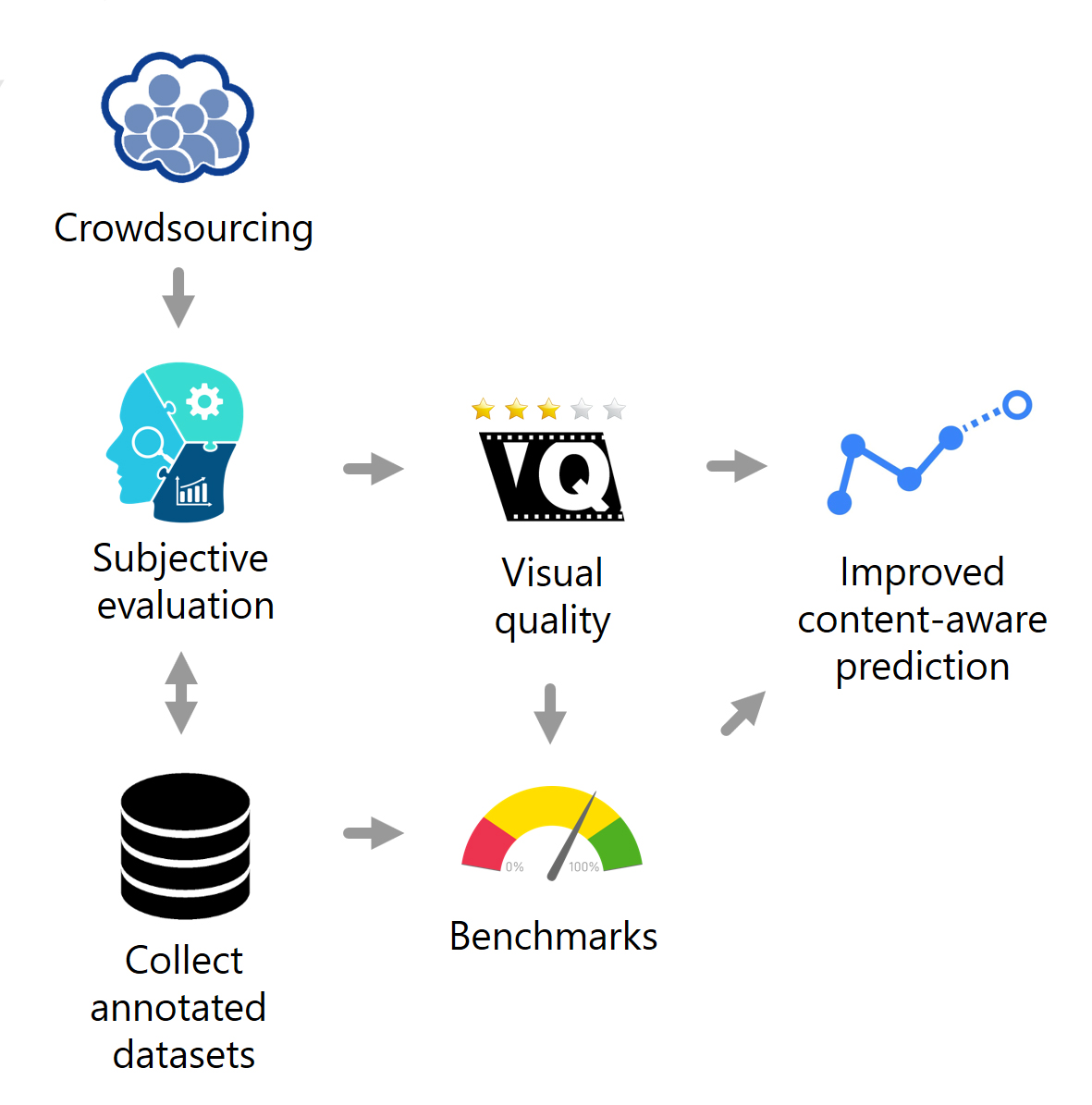

The project addresses methods for automated visual quality assessment and their validation beyond mean opinion scores. We propose to enhance the methods by including similarity awareness and predicted eye movement sequences, quantifying the perceptual viewing experience, and to apply the metrics for quality-aware media processing. Moreover, we will set up and apply media databases that are diverse in content and authentic in the distortions, in contrast to current scientific data sets.

Research Questions

How can crowdsourcing be applied to help generate very large video media databases for research on the quality of multimedia?

How well do state-of-the-art video quality assessment methods, which were designed using small training sets, perform on such large and diversified media databases?

Quality assessment in such extremely large empirical studies requires crowdsourcing. How should that be organized to achieve sufficient reliability and efficiency?

Are machine learning techniques suitable to identify the best performing video quality assessment metrics for given media content?

What statistical/perceptual features should be extracted to express similarity for this task?

How can one design new or hybrid strategies for video quality assessment based on the above?

Can we improve methods for image/video quality assessment by studying patterns of human visual attention and other perceptual aspects?

How can knowledge of human visual attention derived from eye-tracking studies be incorporated into perceptual image/video quality assessment methods?

How can the quality assessment methods be applied in quality-aware media processing such as perceptual coding?
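The performance of a quality assessment method, as asked about above, is commonly summarized by how well its predictions agree in rank order with mean opinion scores (MOS). The following is a minimal sketch of that kind of evaluation, not the project's own protocol: the ratings and predicted scores are hypothetical, and the helper names (`mean_opinion_scores`, `average_ranks`, `spearman`) are chosen here for illustration only.

```python
from statistics import mean


def mean_opinion_scores(ratings_per_item):
    """Average the individual ratings collected for each item (MOS)."""
    return [mean(ratings) for ratings in ratings_per_item]


def average_ranks(values):
    """Return 1-based ranks; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; assumes neither input is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5


def spearman(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical example: four videos, three crowd ratings each (1-5 scale),
# and the scores some objective metric assigned to the same videos.
ratings = [[5, 4, 5], [3, 3, 4], [1, 2, 2], [4, 4, 3]]
predicted = [0.9, 0.55, 0.2, 0.6]

mos = mean_opinion_scores(ratings)
srocc = spearman(predicted, mos)
print(f"SROCC between metric and MOS: {srocc:.3f}")
```

A rank correlation is used here because it does not depend on the metric and the MOS sharing the same scale. In practice it is usually reported alongside a linear correlation and an error measure.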

Publications

- M. Jenadeleh et al., “Fine-Grained HDR Image Quality Assessment From Noticeably Distorted to Very High Fidelity,” in International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2025. doi: 10.48550/arXiv.2506.12505.

- V. Hosu, L. Agnolucci, D. Iso, and D. Saupe, “Image Intrinsic Scale Assessment: Bridging the Gap Between Quality and Resolution,” in International Conference on Computer Vision (ICCV), 2025. doi: 10.48550/arXiv.2502.06476.

- M. Jenadeleh, J. Sneyers, P. Jia, S. Mohammadi, J. Ascenso, and D. Saupe, “Subjective Visual Quality Assessment for High-Fidelity Learning-Based Image Compression,” in International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2025. doi: 10.48550/arXiv.2504.06301.

- D. Saupe and S. H. Del Pin, “Uncovering Cultural Influences on Perceptual Image and Video Quality Assessment through Adaptive Quantized Metric Models,” Journal of Perceptual Imaging, vol. 8, Art. no. 0, 2025, doi: 10.2352/j.percept.imaging.2025.7.000407.

- S. Mohammadi et al., “In-place Double Stimulus Methodology for Subjective Assessment of High Quality Images,” in European Workshop on Visual Information Processing (EUVIP), 2025. doi: 10.48550/arXiv.2508.09777.

- M. Testolina et al., “Fine-Grained Subjective Visual Quality Assessment for High-Fidelity Compressed Images,” in 2025 Data Compression Conference (DCC), IEEE, 2025, pp. 123–132. doi: 10.1109/dcc62719.2025.00020.

- D. Saupe and T. Bleile, “Robustness and Accuracy of MOS with Hard and Soft Outlier Detection,” in International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2025.

- M. Jenadeleh, A. Heß, S. Hviid del Pin, E. Gamboa, M. Hirth, and D. Saupe, “Impact of feedback on crowdsourced visual quality assessment with paired comparisons,” in 2024 16th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, May 2024, pp. 125–131. doi: 10.1109/qomex61742.2024.10598256.

- D. Saupe and S. Hviid del Pin, “National differences in image quality assessment: An investigation on three large-scale IQA datasets,” in 2024 16th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, May 2024, pp. 214–220. doi: 10.1109/qomex61742.2024.10598250.

- V. Hosu, L. Agnolucci, O. Wiedemann, D. Iso, and D. Saupe, “UHD-IQA Benchmark Database: Pushing the Boundaries of Blind Photo Quality Assessment,” in Computer Vision – ECCV 2024 Workshops: Milan, Italy, September 29–October 4, 2024, Proceedings, Part IX, Cham: Springer Nature Switzerland, 2024, pp. 467–482. doi: 10.1007/978-3-031-91838-4_28.

- D. Saupe, K. Rusek, D. Hägele, D. Weiskopf, and L. Janowski, “Maximum Entropy and Quantized Metric Models for Absolute Category Ratings,” IEEE Signal Processing Letters, vol. 31, pp. 2970–2974, 2024, doi: 10.1109/lsp.2024.3480832.

- S. Su et al., “Going the Extra Mile in Face Image Quality Assessment: A Novel Database and Model,” IEEE Transactions on Multimedia, vol. 26, pp. 2671–2685, 2024, doi: 10.1109/tmm.2023.3301276.

- M. Jenadeleh, R. Hamzaoui, U.-D. Reips, and D. Saupe, “Crowdsourced Estimation of Collective Just Noticeable Difference for Compressed Video with the Flicker Test and QUEST+,” IEEE Transactions on Circuits and Systems for Video Technology, p. 1, May 2024, doi: 10.1109/tcsvt.2024.3402363.

- M. Jenadeleh et al., “An Image Quality Dataset with Triplet Comparisons for Multi-dimensional Scaling,” in 2024 16th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2024, pp. 278–281. doi: 10.1109/qomex61742.2024.10598258.

- G. Chen, H. Lin, O. Wiedemann, and D. Saupe, “Localization of Just Noticeable Difference for Image Compression,” in 2023 15th International Conference on Quality of Multimedia Experience (QoMEX), Jun. 2023, pp. 61–66. doi: 10.1109/QoMEX58391.2023.10178653.

- M. Testolina, V. Hosu, M. Jenadeleh, D. Lazzarotto, D. Saupe, and T. Ebrahimi, “JPEG AIC-3 Dataset: Towards Defining the High Quality to Nearly Visually Lossless Quality Range,” in 15th International Conference on Quality of Multimedia Experience (QoMEX), 2023, pp. 55–60. [Online]. Available: https://ieeexplore.ieee.org/document/10178554

- M. Jenadeleh, J. Zagermann, H. Reiterer, U.-D. Reips, R. Hamzaoui, and D. Saupe, “Relaxed forced choice improves performance of visual quality assessment methods,” in 2023 15th International Conference on Quality of Multimedia Experience (QoMEX), 2023, pp. 37–42. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/10178467

- X. Zhao et al., “CUDAS: Distortion-Aware Saliency Benchmark,” IEEE Access, vol. 11, pp. 58025–58036, Jun. 2023, doi: 10.1109/access.2023.3283344.

- O. Wiedemann, V. Hosu, S. Su, and D. Saupe, “Konx: cross-resolution image quality assessment,” Quality and User Experience, vol. 8, Art. no. 1, Aug. 2023, doi: 10.1007/s41233-023-00061-8.

- J. Lou, H. Lin, D. Marshall, D. Saupe, and H. Liu, “TranSalNet: Towards perceptually relevant visual saliency prediction,” Neurocomputing, vol. 494, pp. 455–467, 2022, [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0925231222004714

- F. Götz-Hahn, V. Hosu, and D. Saupe, “Critical Analysis on the Reproducibility of Visual Quality Assessment Using Deep Features,” PLoS ONE, vol. 17, Art. no. 8, 2022, [Online]. Available: https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0269715

- M. Zameshina et al., “Fairness in generative modeling: do it unsupervised!,” in Proceedings of the Genetic and Evolutionary Computation Conference Companion, ACM, Jul. 2022, pp. 320–323. doi: 10.1145/3520304.3528992.

- H. Lin et al., “Large-Scale Crowdsourced Subjective Assessment of Picturewise Just Noticeable Difference,” IEEE Transactions on Circuits and Systems for Video Technology, vol. 32, Art. no. 9, 2022, [Online]. Available: https://ieeexplore.ieee.org/document/9745537

- H. Lin, H. Men, Y. Yan, J. Ren, and D. Saupe, “Crowdsourced Quality Assessment of Enhanced Underwater Images - a Pilot Study,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, Sep. 2022, pp. 1–4. [Online]. Available: https://ieeexplore.ieee.org/document/9900904

- H. Lin, G. Chen, and F. W. Siebert, “Positional Encoding: Improving Class-Imbalanced Motorcycle Helmet use Classification,” in 2021 IEEE International Conference on Image Processing (ICIP), 2021, pp. 1194–1198. [Online]. Available: https://ieeexplore.ieee.org/document/9506178

- F. Götz-Hahn, V. Hosu, H. Lin, and D. Saupe, “KonVid-150k: A Dataset for No-Reference Video Quality Assessment of Videos in-the-Wild,” IEEE Access, vol. 9, pp. 72139–72160, 2021, doi: 10.1109/ACCESS.2021.3077642.

- H. Men, H. Lin, M. Jenadeleh, and D. Saupe, “Subjective Image Quality Assessment with Boosted Triplet Comparisons,” IEEE Access, vol. 9, pp. 138939–138975, 2021, [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9559922

- S. Su, V. Hosu, H. Lin, Y. Zhang, and D. Saupe, “KonIQ++: Boosting No-Reference Image Quality Assessment in the Wild by Jointly Predicting Image Quality and Defects,” in 32nd British Machine Vision Conference, 2021, pp. 1–12. [Online]. Available: https://www.bmvc2021-virtualconference.com/assets/papers/0868.pdf

- B. Roziere et al., “Tarsier: Evolving Noise Injection in Super-Resolution GANs,” in 2020 25th International Conference on Pattern Recognition (ICPR), 2021, pp. 7028–7035. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9413318

- B. Roziere et al., “EvolGAN: Evolutionary Generative Adversarial Networks,” in Computer Vision – ACCV 2020, Cham: Springer International Publishing, Nov. 2021, pp. 679–694. [Online]. Available: https://openaccess.thecvf.com/content/ACCV2020/html/Roziere_EvolGAN_Evolutionary_Generative_Adversarial_Networks_ACCV_2020_paper.html

- H. Lin et al., “SUR-FeatNet: Predicting the Satisfied User Ratio Curve for Image Compression with Deep Feature Learning,” Quality and User Experience, vol. 5, Art. no. 1, 2020, doi: 10.1007/s41233-020-00034-1.

- M. Jenadeleh, M. Pedersen, and D. Saupe, “Blind Quality Assessment of Iris Images Acquired in Visible Light for Biometric Recognition,” Sensors, vol. 20, Art. no. 5, 2020, [Online]. Available: https://www.mdpi.com/1424-8220/20/5/1308

- H. Men, V. Hosu, H. Lin, A. Bruhn, and D. Saupe, “Visual Quality Assessment for Interpolated Slow-Motion Videos Based on a Novel Database,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), 2020, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/9123096

- B. Roziere et al., “Evolutionary Super-Resolution,” in Proceedings of the 2020 Genetic and Evolutionary Computation Conference Companion, in GECCO ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 151–152. doi: 10.1145/3377929.3389959.

- V. Hosu, H. Lin, T. Szirányi, and D. Saupe, “KonIQ-10k: An Ecologically Valid Database for Deep Learning of Blind Image Quality Assessment,” IEEE Transactions on Image Processing, vol. 29, pp. 4041–4056, 2020, [Online]. Available: https://ieeexplore.ieee.org/document/8968750

- H. Lin, J. D. Deng, D. Albers, and F. W. Siebert, “Helmet Use Detection of Tracked Motorcycles Using CNN-Based Multi-Task Learning,” IEEE Access, vol. 8, pp. 162073–162084, 2020, [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9184871

- V. Hosu et al., “From Technical to Aesthetics Quality Assessment and Beyond: Challenges and Potential,” in Joint Workshop on Aesthetic and Technical Quality Assessment of Multimedia and Media Analytics for Societal Trends, in ATQAM/MAST′20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 19–20. doi: 10.1145/3423268.3423589.

- H. Men, V. Hosu, H. Lin, A. Bruhn, and D. Saupe, “Subjective annotation for a frame interpolation benchmark using artefact amplification,” Quality and User Experience, vol. 5, Art. no. 1, 2020, [Online]. Available: https://link.springer.com/article/10.1007%2Fs41233-020-00037-y

- M. Lan Ha, V. Hosu, and V. Blanz, “Color Composition Similarity and Its Application in Fine-grained Similarity,” in 2020 IEEE Winter Conference on Applications of Computer Vision (WACV), Piscataway, NJ: IEEE, 2020, pp. 2548–2557. [Online]. Available: https://ieeexplore.ieee.org/document/9093522

- T. Guha et al., “ATQAM/MAST′20: Joint Workshop on Aesthetic and Technical Quality Assessment of Multimedia and Media Analytics for Societal Trends,” in Proceedings of the 28th ACM International Conference on Multimedia, in MM ’20. New York, NY, USA: Association for Computing Machinery, 2020, pp. 4758–4760. doi: 10.1145/3394171.3421895.

- H. Lin, M. Jenadeleh, G. Chen, U.-D. Reips, R. Hamzaoui, and D. Saupe, “Subjective Assessment of Global Picture-Wise Just Noticeable Difference,” in Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), 2020, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/9106058

- X. Zhao, H. Lin, P. Guo, D. Saupe, and H. Liu, “Deep Learning VS. Traditional Algorithms for Saliency Prediction of Distorted Images,” in 2020 IEEE International Conference on Image Processing (ICIP), 2020, pp. 156–160. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9191203

- O. Wiedemann, V. Hosu, H. Lin, and D. Saupe, “Foveated Video Coding for Real-Time Streaming Applications,” in 2020 Twelfth International Conference on Quality of Multimedia Experience (QoMEX), 2020, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/abstract/document/9123080

- O. Wiedemann and D. Saupe, “Gaze Data for Quality Assessment of Foveated Video,” in ACM Symposium on Eye Tracking Research and Applications, in ETRA ’20 Adjunct. New York, NY, USA: Association for Computing Machinery, 2020. doi: 10.1145/3379157.3391656.

- H. Men, H. Lin, V. Hosu, D. Maurer, A. Bruhn, and D. Saupe, “Visual Quality Assessment for Motion Compensated Frame Interpolation,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2019, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/8743221

- V. Hosu, B. Goldlücke, and D. Saupe, “Effective Aesthetics Prediction with Multi-level Spatially Pooled Features,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 9367–9375, 2019, [Online]. Available: https://ieeexplore.ieee.org/document/8953497

- C. Fan et al., “SUR-Net: Predicting the Satisfied User Ratio Curve for Image Compression with Deep Learning,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2019, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/8743204

- H. Lin, V. Hosu, and D. Saupe, “KADID-10k: A Large-scale Artificially Distorted IQA Database,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2019, pp. 1–3. [Online]. Available: https://ieeexplore.ieee.org/document/8743252

- H. Men, H. Lin, and D. Saupe, “Spatiotemporal Feature Combination Model for No-Reference Video Quality Assessment,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2018, pp. 1–3. [Online]. Available: https://ieeexplore.ieee.org/document/8463426

- D. Varga, D. Saupe, and T. Szirányi, “DeepRN: A Content Preserving Deep Architecture for Blind Image Quality Assessment,” in Proceedings of the IEEE International Conference on Multimedia and Expo (ICME), IEEE, 2018, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/8486528

- V. Hosu, H. Lin, and D. Saupe, “Expertise Screening in Crowdsourcing Image Quality,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2018, pp. 276–281. [Online]. Available: https://ieeexplore.ieee.org/document/8463427

- M. Jenadeleh, M. Pedersen, and D. Saupe, “Realtime Quality Assessment of Iris Biometrics Under Visible Light,” in Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPRW), CVPR Workshops, IEEE, 2018, pp. 443–452. [Online]. Available: https://ieeexplore.ieee.org/document/8575548

- M. Spicker, F. Hahn, T. Lindemeier, D. Saupe, and O. Deussen, “Quantifying Visual Abstraction Quality for Stipple Drawings,” in Proceedings of the Symposium on Non-Photorealistic Animation and Rendering (NPAR), Association for Computing Machinery, 2017, pp. 8:1–8:10. doi: 10.1145/3092919.3092923.

- U. Gadiraju et al., “Crowdsourcing Versus the Laboratory: Towards Human-centered Experiments Using the Crowd,” D. Archambault, H. Purchase, and T. Hossfeld, Eds., in Information Systems and Applications, incl. Internet/Web, and HCI, Springer International Publishing, 2017, pp. 6–26.

- S. Egger-Lampl et al., “Crowdsourcing Quality of Experience Experiments,” D. Archambault, H. Purchase, and T. Hossfeld, Eds., in Information Systems and Applications, incl. Internet/Web, and HCI, Springer International Publishing, 2017, pp. 154–190.

- V. Hosu et al., “The Konstanz natural video database (KoNViD-1k),” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2017, pp. 1–6. [Online]. Available: https://ieeexplore.ieee.org/document/7965673

- V. Hosu, F. Hahn, I. Zingman, and D. Saupe, “Reported Attention as a Promising Alternative to Gaze in IQA Tasks,” in Proceedings of the 5th ISCA/DEGA Workshop on Perceptual Quality of Systems (PQS 2016), 2016, pp. 117–121. [Online]. Available: https://www.isca-speech.org/archive/PQS_2016/abstracts/25.html

- D. Saupe, F. Hahn, V. Hosu, I. Zingman, M. Rana, and S. Li, “Crowd Workers Proven Useful: A Comparative Study of Subjective Video Quality Assessment,” in Proceedings of the International Conference on Quality of Multimedia Experience (QoMEX), 2016, pp. 1–2. [Online]. Available: https://www.uni-konstanz.de/mmsp/pubsys/publishedFiles/SaHaHo16.pdf

- I. Zingman, D. Saupe, O. A. B. Penatti, and K. Lambers, “Detection of Fragmented Rectangular Enclosures in Very High Resolution Remote Sensing Images,” IEEE Transactions on Geoscience and Remote Sensing, vol. 54, Art. no. 8, 2016, [Online]. Available: https://ieeexplore.ieee.org/document/7452408

- V. Hosu, F. Hahn, O. Wiedemann, S.-H. Jung, and D. Saupe, “Saliency-driven Image Coding Improves Overall Perceived JPEG Quality,” in Proceedings of the Picture Coding Symposium (PCS), IEEE, 2016, pp. 1–5. [Online]. Available: https://www.uni-konstanz.de/mmsp/pubsys/publishedFiles/HoHaWi16.pdf

© SFB-TRR 161 | Quantitative Methods for Visual Computing | 2019.