Y. Wang, Y. Jiang, Z. Hu, C. Ruhdorfer, M. Bâce, and A. Bulling, “VisRecall++: Analysing and Predicting Visualisation Recallability from Gaze Behaviour,” Proc. ACM on Human-Computer Interaction (PACM HCI), vol. 8, no. ETRA, Art. no. 239, Jul. 2024, doi: 10.1145/3655613.

Abstract

Question answering has recently been proposed as a promising means to assess the recallability of information visualisations. However, prior work has yet to study the link between visually encoding a visualisation in memory and recall performance. To fill this gap, we propose VisRecall++ -- a novel 40-participant recallability dataset that contains gaze data on 200 visualisations and 1,000 questions, including identifying the title and retrieving values. We measured recallability by asking participants questions after they observed the visualisation for 10 seconds. Our analyses reveal several insights, such as that saccade amplitude, number of fixations, and fixation duration differ significantly between high and low recallability groups. Finally, we propose GazeRecallNet -- a novel computational method to predict recallability from gaze behaviour that outperforms the state-of-the-art model RecallNet and three other baselines on this task. Taken together, our results shed light on assessing recallability from gaze behaviour and inform future work on recallability-based visualisation optimisation.

Y. Wang et al., “SalChartQA: Question-driven Saliency on Information Visualisations,” in Proceedings of the CHI Conference on Human Factors in Computing Systems (CHI). ACM, May 2024, pp. 1–14. doi: 10.1145/3613904.3642942.

Abstract

Understanding the link between visual attention and users' information needs when visually exploring information visualisations is under-explored due to a lack of large and diverse datasets to facilitate these analyses. To fill this gap, we introduce SalChartQA -- a novel crowd-sourced dataset that uses the BubbleView interface to track user attention and a question-answering (QA) paradigm to induce different information needs in users. SalChartQA contains 74,340 answers to 6,000 questions on 3,000 visualisations. Informed by our analyses demonstrating the close correlation between information needs and visual saliency, we propose the first computational method to predict question-driven saliency on visualisations. Our method outperforms state-of-the-art saliency models for several metrics, such as the correlation coefficient and the Kullback-Leibler divergence. These results show the importance of information needs for shaping attentive behaviour and pave the way for new applications, such as task-driven optimisation of visualisations or explainable AI in chart question-answering.

Y. Wang, Q. Dai, M. Bâce, K. Klein, and A. Bulling, “Saliency3D: a 3D Saliency Dataset Collected on Screen,” in Proc. ACM International Symposium on Eye Tracking Research and Applications (ETRA). ACM, 2024, pp. 1–6. doi: 10.1145/3649902.3653350.

Abstract

While visual saliency has recently been studied in 3D, the experimental setup for collecting 3D saliency data can be expensive and cumbersome. To address this challenge, we propose a novel experimental design that utilises an eye tracker on a screen to collect 3D saliency data, which could reduce the cost and complexity of data collection. We first collected gaze data on a computer screen and then mapped the 2D points to 3D saliency data through perspective transformation. Using this method, we propose Saliency3D, a 3D saliency dataset (49,276 fixations) comprising 10 participants looking at sixteen objects. We examined the viewing preferences for objects and our results indicate potential preferred viewing directions and a correlation between salient features and the variation in viewing directions.

E. Sood, L. Shi, M. Bortoletto, Y. Wang, P. Müller, and A. Bulling, “Improving Neural Saliency Prediction with a Cognitive Model of Human Visual Attention,” in Proceedings of the 45th Annual Meeting of the Cognitive Science Society (CogSci). Jul. 2023, pp. 3639–3646. [Online]. Available: https://escholarship.org/uc/item/5968p71m

Abstract

We present a novel method for saliency prediction that leverages a cognitive model of visual attention as an inductive bias. This approach is in stark contrast to recent purely data-driven saliency models that achieve performance improvements mainly by increased capacity, resulting in high computational costs and the need for large-scale training datasets. We demonstrate that by using a cognitive model, our method achieves competitive performance to the state of the art across several natural image datasets while only requiring a fraction of the parameters. Furthermore, we set the new state of the art for saliency prediction on information visualizations, demonstrating the effectiveness of our approach for cross-domain generalization. We further provide augmented versions of the full MSCOCO dataset with synthetic gaze data using the cognitive model, which we used to pre-train our method. Our results are highly promising and underline the significant potential of bridging between cognitive and data-driven models, potentially also beyond attention.

Y. Wang, M. Bâce, and A. Bulling, “Scanpath Prediction on Information Visualisations,” IEEE Transactions on Visualization and Computer Graphics, pp. 1–15, Feb. 2023, doi: 10.1109/TVCG.2023.3242293.

Abstract

We propose the Unified Model of Saliency and Scanpaths (UMSS) -- a model that learns to predict multi-duration saliency and scanpaths (i.e. sequences of eye fixations) on information visualisations. Although scanpaths provide rich information about the importance of different visualisation elements during the visual exploration process, prior work has been limited to predicting aggregated attention statistics, such as visual saliency. We present in-depth analyses of gaze behaviour for different information visualisation elements (e.g. Title, Label, Data) on the popular MASSVIS dataset. We show that while, overall, gaze patterns are surprisingly consistent across visualisations and viewers, there are also structural differences in gaze dynamics for different elements. Informed by our analyses, UMSS first predicts multi-duration element-level saliency maps, then probabilistically samples scanpaths from them. Extensive experiments on MASSVIS show that our method consistently outperforms state-of-the-art methods with respect to several widely used scanpath and saliency evaluation metrics. Our method achieves a relative improvement in sequence score of 11.5% for scanpath prediction, and a relative improvement in Pearson correlation coefficient of up to 23.6% for saliency prediction. These results are auspicious and point towards richer user models and simulations of visual attention on visualisations without the need for any eye tracking equipment.

Y. Wang, M. Koch, M. Bâce, D. Weiskopf, and A. Bulling, “Impact of Gaze Uncertainty on AOIs in Information Visualisations,” in 2022 Symposium on Eye Tracking Research and Applications. ACM, Jun. 2022, pp. 1–6. doi: 10.1145/3517031.3531166.

Abstract

Gaze-based analysis of areas of interest (AOI) is widely used in information visualisation research to understand how people explore visualisations or assess the quality of visualisations concerning key characteristics such as memorability. However, nearby AOIs in visualisations amplify the uncertainty caused by the gaze estimation error, which strongly influences the mapping between gaze samples or fixations and different AOIs. We contribute a novel investigation into gaze uncertainty and quantify its impact on AOI-based analysis on visualisations using two novel metrics: the Flipping Candidate Rate (FCR) and Hit Any AOI Rate (HAAR). Our analysis of 40 real-world visualisations, including human gaze and AOI annotations, shows that uncertainty commonly appears in visualisations, which significantly impacts the analysis conducted in AOI-based studies. Moreover, we analysed four visualisation types and found that bar and scatter plots are commonly designed in a way that causes more uncertainty than line and pie plots in gaze-based analysis.

Y. Wang, C. Jiao, M. Bâce, and A. Bulling, “VisRecall: Quantifying Information Visualisation Recallability Via Question Answering,” IEEE Transactions on Visualization and Computer Graphics, vol. 28, no. 12, 2022, doi: 10.1109/TVCG.2022.3198163.

Abstract

Despite its importance for assessing the effectiveness of communicating information visually, fine-grained recallability of information visualisations has not been studied quantitatively so far. In this work, we propose a question-answering paradigm to study visualisation recallability and present VisRecall - a novel dataset consisting of 200 visualisations that are annotated with crowd-sourced human (N = 305) recallability scores obtained from 1,000 questions of five question types. Furthermore, we present the first computational method to predict recallability of different visualisation elements, such as the title or specific data values. We report detailed analyses of our method on VisRecall and demonstrate that it outperforms several baselines in overall recallability and FE-, F-, RV-, and U-question recallability. Our work makes fundamental contributions towards a new generation of methods to assist designers in optimising visualisations.

F. Chiossi et al., “Adapting visualizations and interfaces to the user,” it - Information Technology, vol. 64, no. 4–5, 2022, doi: 10.1515/itit-2022-0035.

Abstract

Adaptive visualization and interfaces pervade our everyday tasks to improve interaction from the point of view of user performance and experience. This approach allows using several user inputs, whether physiological, behavioral, qualitative, or multimodal combinations, to enhance the interaction. Due to the multitude of approaches, we outline the current research trends of inputs used to adapt visualizations and user interfaces. Moreover, we discuss methodological approaches used in mixed reality, physiological computing, visual analytics, and proficiency-aware systems. With this work, we provide an overview of the current research in adaptive systems.

K. Kurzhals et al., “Visual Analytics and Annotation of Pervasive Eye Tracking Video,” in Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA). Stuttgart, Germany: ACM, 2020, pp. 16:1–16:9. doi: 10.1145/3379155.3391326.

Abstract

We propose a new technique for visual analytics and annotation of long-term pervasive eye tracking data for which a combined analysis of gaze and egocentric video is necessary. Our approach enables two important tasks for such data for hour-long videos from individual participants: (1) efficient annotation and (2) direct interpretation of the results. Exemplary time spans can be selected by the user and are then used as a query that initiates a fuzzy search of similar time spans based on gaze and video features. In an iterative refinement loop, the query interface then provides suggestions for the importance of individual features to improve the search results. A multi-layered timeline visualization shows an overview of annotated time spans. We demonstrate the efficiency of our approach for analyzing activities in about seven hours of video in a case study and discuss feedback on our approach from novices and experts performing the annotation task.

H. Sattar, A. Bulling, and M. Fritz, “Predicting the Category and Attributes of Visual Search Targets Using Deep Gaze Pooling,” in Proceedings of the IEEE International Conference on Computer Vision Workshops (ICCVW). 2017, pp. 2740–2748. doi: 10.1109/ICCVW.2017.322.

Abstract

Predicting the target of visual search from human gaze data is a challenging problem. In contrast to previous work that focused on predicting specific instances of search targets, we propose the first approach to predict a target's category and attributes. However, state-of-the-art models for categorical recognition require large amounts of training data, which is prohibitive for gaze data. We thus propose a novel Gaze Pooling Layer that integrates gaze information and CNN-based features by an attention mechanism - incorporating both spatial and temporal aspects of gaze behaviour. We show that our approach can leverage pre-trained CNN architectures, thus eliminating the need for expensive joint data collection of image and gaze data. We demonstrate the effectiveness of our method on a new 14-participant dataset, and indicate directions for future research in the gaze-based prediction of mental states.

M. Tonsen, J. Steil, Y. Sugano, and A. Bulling, “InvisibleEye: Mobile Eye Tracking Using Multiple Low-Resolution Cameras and Learning-Based Gaze Estimation,” in Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (IMWUT), vol. 1. 2017, pp. 106:1–106:21. doi: 10.1145/3130971.

Abstract

Analysis of everyday human gaze behaviour has significant potential for ubiquitous computing, as evidenced by a large body of work in gaze-based human-computer interaction, attentive user interfaces, and eye-based user modelling. However, current mobile eye trackers are still obtrusive, which not only makes them uncomfortable to wear and socially unacceptable in daily life, but also prevents them from being widely adopted in the social and behavioural sciences. To address these challenges we present InvisibleEye, a novel approach for mobile eye tracking that uses millimetre-size RGB cameras that can be fully embedded into normal glasses frames. To compensate for the cameras’ low image resolution of only a few pixels, our approach uses multiple cameras to capture different views of the eye, as well as learning-based gaze estimation to directly regress from eye images to gaze directions. We prototypically implement our system and characterise its performance on three large-scale, increasingly realistic, and thus challenging datasets: 1) eye images synthesised using a recent computer graphics eye region model, 2) real eye images recorded of 17 participants under controlled lighting, and 3) eye images recorded of four participants over the course of four recording sessions in a mobile setting. We show that InvisibleEye achieves a top person-specific gaze estimation accuracy of 1.79° using four cameras with a resolution of only 5 × 5 pixels. Our evaluations not only demonstrate the feasibility of this novel approach but, more importantly, underline its significant potential for finally realising the vision of invisible mobile eye tracking and pervasive attentive user interfaces.

X. Zhang, Y. Sugano, and A. Bulling, “Everyday Eye Contact Detection Using Unsupervised Gaze Target Discovery,” in Proceedings of the ACM Symposium on User Interface Software and Technology (UIST). 2017, pp. 193–203. doi: 10.1145/3126594.3126614.

Abstract

Eye contact is an important non-verbal cue in social signal processing and promising as a measure of overt attention in human-object interactions and attentive user interfaces. However, robust detection of eye contact across different users, gaze targets, camera positions, and illumination conditions is notoriously challenging. We present a novel method for eye contact detection that combines a state-of-the-art appearance-based gaze estimator with a novel approach for unsupervised gaze target discovery, i.e. without the need for tedious and time-consuming manual data annotation. We evaluate our method in two real-world scenarios: detecting eye contact at the workplace, including on the main work display, from cameras mounted to target objects, as well as during everyday social interactions with the wearer of a head-mounted egocentric camera. We empirically evaluate the performance of our method in both scenarios and demonstrate its effectiveness for detecting eye contact independent of target object type and size, camera position, and user and recording environment.

X. Zhang, Y. Sugano, M. Fritz, and A. Bulling, “MPIIGaze: Real-World Dataset and Deep Appearance-Based Gaze Estimation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 41, no. 1, 2017, doi: 10.1109/TPAMI.2017.2778103.

BibTeX

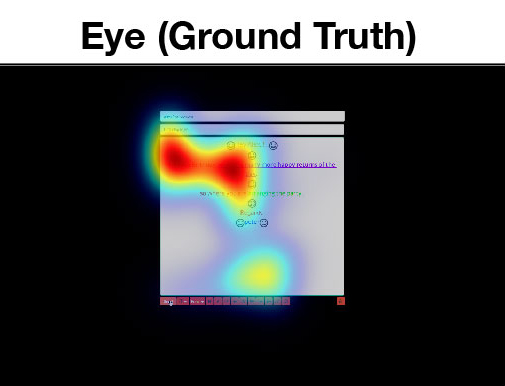

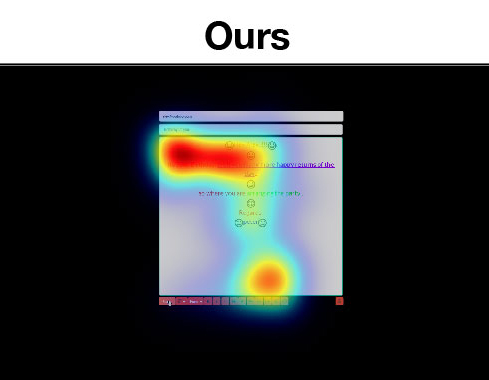

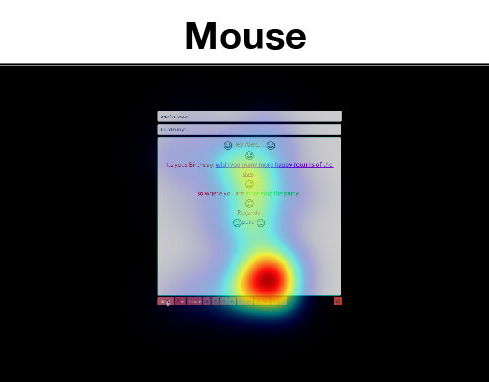

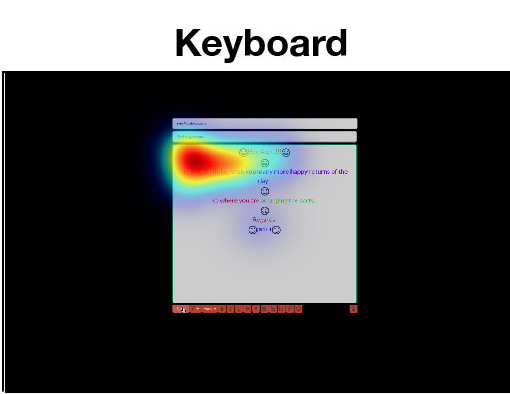

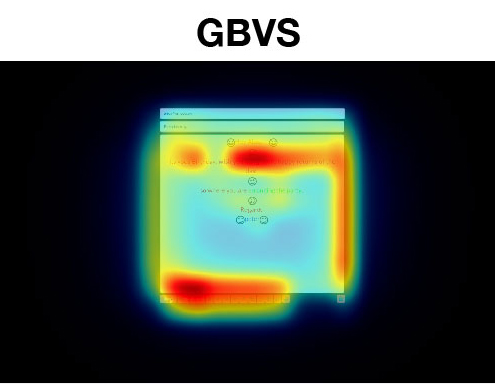

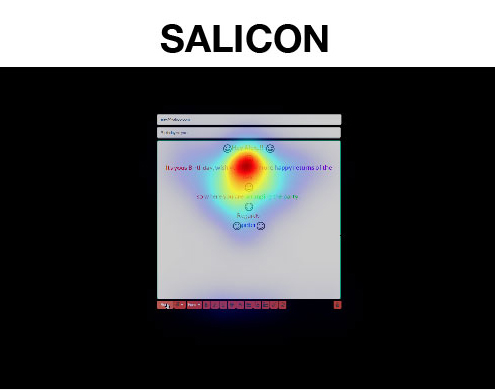

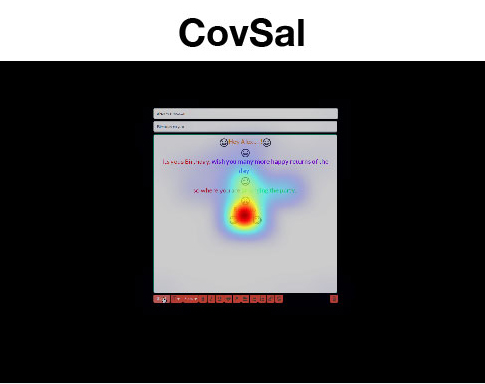

P. Xu, Y. Sugano, and A. Bulling, “Spatio-Temporal Modeling and Prediction of Visual Attention in Graphical User Interfaces,” in Proceedings of the CHI Conference on Human Factors in Computing Systems. 2016, pp. 3299–3310. doi: 10.1145/2858036.2858479.

Abstract

We present a computational model to predict users' spatio-temporal visual attention on WIMP-style (windows, icons, menus, pointer) graphical user interfaces. Like existing models of bottom-up visual attention in computer vision, our model does not require any eye tracking equipment. Instead, it predicts attention solely using information available to the interface, specifically users' mouse and keyboard input as well as the UI components they interact with. To study our model in a principled way, we further introduce a method to synthesize user interface layouts that are functionally equivalent to real-world interfaces, such as from Gmail, Facebook, or GitHub. We first quantitatively analyze attention allocation and its correlation with user input and UI components using ground-truth gaze, mouse, and keyboard data of 18 participants performing a text editing task. We then show that our model predicts attention maps more accurately than state-of-the-art methods. Our results underline the significant potential of spatio-temporal attention modeling for user interface evaluation, optimization, or even simulation.

E. Wood, T. Baltrusaitis, L.-P. Morency, P. Robinson, and A. Bulling, “A 3D Morphable Eye Region Model for Gaze Estimation,” in Proceedings of the European Conference on Computer Vision (ECCV). 2016, pp. 297–313. doi: 10.1007/978-3-319-46448-0_18.

Abstract

Morphable face models are a powerful tool, but have previously failed to model the eye accurately due to complexities in its material and motion. We present a new multi-part model of the eye that includes a morphable model of the facial eye region, as well as an anatomy-based eyeball model. It is the first morphable model that accurately captures eye region shape, since it was built from high-quality head scans. It is also the first to allow independent eyeball movement, since we treat it as a separate part. To showcase our model we present a new method for illumination- and head-pose–invariant gaze estimation from a single RGB image. We fit our model to an image through analysis-by-synthesis, solving for eye region shape, texture, eyeball pose, and illumination simultaneously. The fitted eyeball pose parameters are then used to estimate gaze direction. Through evaluation on two standard datasets we show that our method generalizes to both webcam and high-quality camera images, and outperforms a state-of-the-art CNN method, achieving a gaze estimation accuracy of 9.44° in a challenging user-independent scenario.

E. Wood, T. Baltrusaitis, L.-P. Morency, P. Robinson, and A. Bulling, “Learning an Appearance-Based Gaze Estimator from One Million Synthesised Images,” in Proceedings of the Symposium on Eye Tracking Research & Applications (ETRA). 2016, pp. 131–138. doi: 10.1145/2857491.2857492.

Abstract

Learning-based methods for appearance-based gaze estimation achieve state-of-the-art performance in challenging real-world settings but require large amounts of labelled training data. Learning-by-synthesis was proposed as a promising solution to this problem but current methods are limited with respect to speed, appearance variability, and the head pose and gaze angle distribution they can synthesize. We present UnityEyes, a novel method to rapidly synthesize large amounts of variable eye region images as training data. Our method combines a novel generative 3D model of the human eye region with a real-time rendering framework. The model is based on high-resolution 3D face scans and uses real-time approximations for complex eyeball materials and structures as well as anatomically inspired procedural geometry methods for eyelid animation. We show that these synthesized images can be used to estimate gaze in difficult in-the-wild scenarios, even for extreme gaze angles or in cases in which the pupil is fully occluded. We also demonstrate competitive gaze estimation results on a benchmark in-the-wild dataset, despite only using a light-weight nearest-neighbor algorithm. We are making our UnityEyes synthesis framework available online for the benefit of the research community.

X. Zhang, Y. Sugano, M. Fritz, and A. Bulling, “It’s Written All Over Your Face: Full-Face Appearance-Based Gaze Estimation,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). 2016, pp. 2299–2308. doi: 10.1109/CVPRW.2017.284.
